How to Install and Configure Minikube on Ubuntu

Introduction

This article will demonstrate how to install and configure Minikube to set up a small Kubernetes cluster. We will then examine Kubernetes in more detail to apply that knowledge to a real-world example.

What is Minikube?

A full Kubernetes setup typically needs at least one master server and two worker servers. For learning, training, and testing, local tools such as Minikube are used instead. Minikube is a tool that makes it simple to run a local Kubernetes cluster: it launches a single-node cluster inside a virtual machine or, as in this tutorial, inside a Docker container. Before continuing, install and configure Minikube by following How to Install Minikube on CentOS, Mac, and Windows. In this tutorial, we use Ubuntu 18.04 to demonstrate the examples on one local cluster.

What is Kubernetes?

Kubernetes is an open-source platform used for managing containerized workloads and automating services. Many businesses are moving to container-based systems to save resources, reduce management costs, and improve ease of use. Kubernetes automates many of the manual processes involved in running our applications in containers. Some of the benefits of Kubernetes include:

- Service monitoring and load balancing.

- Storage management.

- Automatic container deployment.

- Automatic container recovery and restart.

- Automatic load distribution between containers.

- The self-control of containers.

- Confidential information and configuration management.

It is also important to note that Kubernetes itself is not an end-to-end Platform as a Service, but it can be a foundation on which a PaaS is developed. Because Kubernetes operates at the container level and not the hardware level, it can quickly deploy, scale, load balance, and monitor containers.

Create a Cluster

Let's start Minikube and create a cluster.

root@host:~# minikube start

😄 minikube v1.13.1 on Ubuntu 18.04

✨ Using the docker driver based on user configuration

👍 Starting control plane node minikube in cluster minikube

🚜 Pulling base image ...

💾 Downloading Kubernetes v1.19.2 preload ...

> preloaded-images-k8s-v6-v1.19.2-docker-overlay2-amd64.tar.lz4: 486.36 MiB

🔥 Creating docker container (CPUs=2, Memory=2200MB) ...

🐳 Preparing Kubernetes v1.19.2 on Docker 19.03.8 ...

🔎 Verifying Kubernetes components...

🌟 Enabled addons: default-storageclass, storage-provisioner

🏄 Done! kubectl is now configured to use "minikube" by default

root@host:~#

This command creates and configures a container that runs a single-node Kubernetes cluster. It also configures kubectl to communicate with this cluster. The output shows that Minikube downloaded and installed Kubernetes v1.19.2.

Once minikube has arranged everything for us to work with K8s, we can interact with the cluster using kubectl.

Cluster Information

We can obtain information about the cluster using this command.

root@host:~# kubectl cluster-info

Kubernetes master is running at https://172.17.0.3:8443

KubeDNS is running at https://172.17.0.3:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

root@host:~#

Diagnose Cluster

To further debug and diagnose cluster problems, use this command.

root@host:~# kubectl cluster-info dump

Review Nodes

Now, let’s take a look at nodes in the cluster.

root@host:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready master 2m20s v1.19.2

root@host:~#

This command shows all the nodes that can be used to host our applications. We have one node, and its Ready status shows that it can accept deployment requests.

Deploy Cluster

We will now create a Kubernetes deployment named hello-minikube using an existing image named echoserver, a simple HTTP server that listens on port 8080.

root@host:~# kubectl create deployment hello-minikube --image=k8s.gcr.io/echoserver:1.10

deployment.apps/hello-minikube created

root@host:~#

Now, to make the deployment accessible, expose it as a Service on port 8080 using the --port flag.

Note: The --type=NodePort flag specifies the type of the Service.

root@host:~# kubectl expose deployment hello-minikube --type=NodePort --port=8080

service/hello-minikube exposed

root@host:~#

Verify Deployment

To verify the instance deployment, run this command.

root@host:~# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-minikube 1/1 1 1 79s

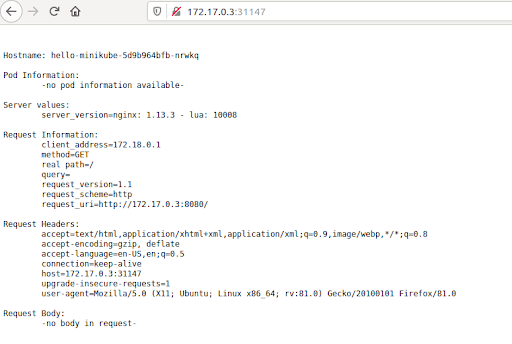

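root@host:~#

Next, open the application in a browser. Because the Service type is NodePort, it is reached at the node's IP address and the node port assigned to the Service rather than at port 8080 directly. In this example the address is http://172.17.0.3:31147/, as the host header in the output below shows; kubectl get services lists the node port assigned on your system.

The echoserver responds with details about the request. The request_uri line reports port 8080 because that is the port the container itself listens on.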

Hostname: hello-minikube-5d9b964bfb-nrwkq

Pod Information:

-no pod information available-

Server values:

server_version=nginx: 1.13.3 - lua: 10008

Request Information:

client_address=172.18.0.1

method=GET

real path=/

query=

request_version=1.1

request_scheme=http

request_uri=http://172.17.0.3:8080/

Request Headers:

accept=text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

accept-encoding=gzip, deflate

accept-language=en-US,en;q=0.5

connection=keep-alive

host=172.17.0.3:31147

upgrade-insecure-requests=1

user-agent=Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:81.0) Gecko/20100101 Firefox/81.0

Request Body:

-no body in request-

This data describes the request as it was handled by the application in the local cluster, as displayed in the screenshot.

Delete Application

Next, we will delete our application. To remove the hello-minikube service, run this command.

root@host:~# kubectl delete services hello-minikube

service "hello-minikube" deleted

root@host:~#

Delete Deployment

We can delete the deployment using this command.

root@host:~# kubectl delete deployment hello-minikube

deployment.apps "hello-minikube" deleted

root@host:~#

Stop Cluster

To stop the cluster, simply run the command below.

root@host:~# minikube stop

✋ Stopping node "minikube" ...

🛑 Powering off "minikube" via SSH ...

🛑 1 nodes stopped.

root@host:~#

Although this command stops the cluster, it preserves the cluster's state and data. Starting the cluster again returns it to the condition it was in when stopped.

Delete Cluster

To delete the cluster, run this command.

root@host:~# minikube delete

🔥 Deleting "minikube" in docker ...

🔥 Deleting container "minikube" ...

🔥 Removing /home/margo/.minikube/machines/minikube ...

💀 Removed all traces of the "minikube" cluster.

root@host:~#

This command deletes all the cluster data as well as the state.

Important Kubernetes Basics

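Kubernetes clusters can be deployed on physical machines, virtual machines, or a mix of both. A cluster that handles production traffic should consist of at least three nodes; the single-node cluster used in this tutorial is meant only for learning and testing. Kubernetes ensures that containers run when and where we need them and helps allocate the resources they require to run smoothly.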

Developers must update their applications to maintain stability. Containerization helps developers release and update applications easily, limiting downtime.

What is a Kubernetes Cluster?

A cluster is a set of nodes that run containerized applications. Kubernetes allows us to deploy containerized applications in a cluster without binding them to specific devices. These containers are more flexible, require fewer resources, and are more affordable than previous deployment models.

Previously, applications were installed directly onto specific servers, where they were deeply integrated into the host. Kubernetes automates the distribution and scheduling of application containers across a cluster more efficiently. A cluster consists of two types of resources:

- Master: This resource coordinates the cluster.

- Node: Nodes are workers that run applications.

The Master is responsible for cluster management and coordinates all activities in a cluster, such as:

- Application scheduling.

- Maintaining the desired application state.

- Application scaling.

- Application deployment.

- Rollout of new updates.

What is a Node?

A node is a physical computer or virtual machine that serves as a worker machine within a Kubernetes cluster. Each node runs a kubelet, an agent that manages the node and communicates with the Kubernetes master. Nodes also have tools for handling container operations.

When we deploy applications on Kubernetes, we tell the master to start the application containers. The master schedules the containers to run on the cluster nodes. The nodes communicate with the master using the Kubernetes API, which the master exposes.

In the previous Minikube example, we used the minikube start command to launch a cluster. This created a container and started a cluster within it. To gather information about the cluster, we used the kubectl cluster-info command.

Get Node Information

To get information about the nodes, we used this command.

root@host:~# kubectl get nodes

What is the Kubernetes API?

Let's consider another important component: the Kubernetes API. The Kubernetes API server exposes Kubernetes functionality through a RESTful (Representational State Transfer) interface and stores the state of the cluster. Kubernetes resources and "records of intent" are kept as API objects and modified through RESTful calls to the API.

This allows us to manage configuration declaratively, that is, by specifying the expected result rather than the steps needed to reach it. Users typically communicate with this API through a tool such as kubectl. It is possible to call the API directly, but this is less convenient and does not offer the ready-made commands that kubectl provides.
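As a rough illustration of the declarative style, the sketch below builds the kind of API object that describes a desired state and serializes it to JSON. The field names follow the apps/v1 Deployment schema; the helper name build_deployment, the container name, and the app label are assumptions made for this example and are not defined anywhere in this tutorial.

```python
import json


def build_deployment(name, image, replicas=1, port=8080):
    """Return a dict shaped like an apps/v1 Deployment manifest.

    build_deployment is a hypothetical helper used for illustration only.
    """
    labels = {"app": name}  # assumed label that ties the Deployment to its Pods
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired number of Pods
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "echoserver",  # assumed container name
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = build_deployment("hello-minikube", "k8s.gcr.io/echoserver:1.10")
print(json.dumps(manifest, indent=2))
```

The manifest only states the result we want (one replica of a given image); it contains no instructions for how to reach that state. Saved to a file, output like this could be submitted with kubectl apply -f.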

Application Deployment

Once we have a working cluster, we can deploy our containerized applications to it. To do so, we create a Deployment configuration. The Deployment describes how to create and update instances of our application. Once the Deployment is created, the master schedules the application instances it describes onto individual nodes in the cluster.

After the application instances are created, the Kubernetes Deployment controller continuously monitors those instances and their state. If the node hosting an instance goes down or is removed, the controller replaces the instance with one on a different node in the cluster. This provides a self-healing mechanism that handles machine failure and maintenance.
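The self-healing behavior described above can be pictured as a loop that compares the desired state with the actual state and corrects the difference. The following is a minimal sketch of one pass of such a loop, not the real controller or scheduler: the function name reconcile, the node names, and the "least-loaded node first" placement rule are all assumptions chosen for illustration.

```python
def reconcile(desired_replicas, pods_by_node, healthy_nodes):
    """Run one pass of a simplified control loop.

    pods_by_node maps a node name to the number of instances running there.
    The result only uses healthy nodes and, if at least one healthy node
    exists, holds exactly desired_replicas instances.
    """
    # Instances on failed or removed nodes are treated as lost.
    current = {n: c for n, c in pods_by_node.items() if n in healthy_nodes}
    for node in healthy_nodes:
        current.setdefault(node, 0)
    if not current:
        return current  # no healthy node to schedule on

    running = sum(current.values())
    # Too few instances: start replacements on the least-loaded node.
    while running < desired_replicas:
        target = min(current, key=current.get)
        current[target] += 1
        running += 1
    # Too many instances: stop some on the most-loaded node.
    while running > desired_replicas:
        target = max(current, key=current.get)
        current[target] -= 1
        running -= 1
    return current


# node-a has failed; its two instances must be replaced elsewhere.
state = {"node-a": 2, "node-b": 1}
result = reconcile(3, state, ["node-b", "node-c"])
print(result)
```

After the pass, the failed node no longer appears in the result and the total number of instances again matches the desired count.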

Earlier tools for deploying and launching applications did not monitor the state of the machine and could not restore a fully working application after a failure.

We already used this command earlier.

root@host:~# kubectl create deployment hello-minikube --image=k8s.gcr.io/echoserver:1.10

We deployed our application by creating a deployment.

This command performs the following functions:

- Looks for a suitable node on which to run an instance of the application (we only had one available node).

- Schedules application launch on this node.

- Configures the cluster to reschedule the instance on a new node if necessary.

Next, we entered the following command to check the list of our deployments and their state.

root@host:~# kubectl get deployments

The vital point to note is that Pods running inside Kubernetes run within an isolated network. By default, they are visible to other Pods and Services in the same cluster, but not outside this network. Keep this in mind and expose the Pods that must be reachable from outside the cluster.

What is a Pod?

When we deployed our application, Kubernetes created a Pod to host an instance of our application. A Pod is a group of one or more application containers together with resources shared by those containers. These resources consist of:

- Shared storage as Volumes.

- Network as a unique cluster IP address.

- Information on how to start each container, version of the container image, or the specific ports to use.

A Pod is the basic unit on the Kubernetes platform. When we create a Deployment in Kubernetes, the Deployment creates Pods with containers inside them. Each Pod is bound to the node on which it is scheduled and remains there until it terminates (according to its restart policy) or is deleted.

The Pod models a "logical host" for a particular application and can contain several containerized applications that are closely related to each other. The containers in a Pod share an IP address and port space. They are always co-located and scheduled to run on the same node.

In the event of a node failure, identical Pods are scheduled on other available nodes in the cluster. A Pod always runs on a node, and every node runs:

- A kubelet, a process responsible for communication between the Kubernetes master and the node; it manages the Pods and containers running on the machine.

- A container runtime, which is responsible for retrieving the container image from a registry, unpacking it, and launching the application.

It is essential to keep track of all this and configure it for correct operation. The most common commands can be executed with kubectl:

- kubectl get: Lists available resources.

- kubectl describe: Shows detailed information about a resource.

- kubectl logs: Prints the logs from a container in a Pod.

- kubectl exec: Executes a command in a container in a Pod.

Kubernetes Lifecycle

Kubernetes Pods have a lifecycle. When a worker node dies, the Pods running on that node are lost as well. A ReplicaSet (which maintains a stable set of replica Pods running at any given time and is often used to guarantee that a specified number of identical Pods is available) can then dynamically return the cluster to the desired state by creating new Pods to keep our application running.

These replicas are interchangeable. The front-end system does not care about individual back-end replicas, or even whether a Pod is lost and re-created. However, every Pod in a Kubernetes cluster has a unique IP address, even Pods on the same node, so there must be a way to automatically reconcile changes among Pods so that our applications continue to run smoothly.

What is a Kubernetes Service?

A Kubernetes Service is an abstraction that defines a logical set of Pods and a policy for accessing them. Services provide loose coupling between dependent Pods. Like all Kubernetes objects, a Service is defined using YAML or JSON.

Although each Pod has a unique IP address, that address is not exposed outside the cluster without a Service. Services allow our applications to receive traffic. We can expose a Service in different ways by specifying a type in the ServiceSpec:

- ClusterIP: Exposes the Service on an internal IP address in the cluster. This makes the Service available only within the cluster.

- NodePort: Uses NAT to expose the Service on the same port of every node in the cluster. This makes the Service accessible from outside the cluster.

- LoadBalancer: Creates a load balancer in the current cloud (if supported) and assigns a fixed external IP address to the Service.

- ExternalName: Provides a service using an arbitrary name by returning a CNAME record with the name.
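To make the type field more concrete, here is a small sketch that assembles a Service definition as a Python dictionary and prints it as JSON. The field names follow the v1 Service schema, and 30000-32767 is the default range from which node ports are allocated. The helper name build_service and the app label in the selector are assumptions for this example. ExternalName is left out because it is configured with an external DNS name rather than a selector.

```python
import json

# Default range from which Kubernetes allocates node ports.
NODE_PORT_RANGE = range(30000, 32768)


def build_service(name, port, service_type="ClusterIP", node_port=None):
    """Return a dict shaped like a v1 Service manifest (hypothetical helper)."""
    allowed = {"ClusterIP", "NodePort", "LoadBalancer"}
    if service_type not in allowed:
        raise ValueError(f"unsupported type for this sketch: {service_type}")

    port_spec = {"port": port, "targetPort": port}
    if node_port is not None:
        if service_type == "ClusterIP":
            raise ValueError("a ClusterIP Service has no node port")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError("node port outside the default 30000-32767 range")
        port_spec["nodePort"] = node_port

    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},  # assumed label on the target Pods
            "ports": [port_spec],
        },
    }


service = build_service("hello-minikube", 8080, "NodePort", node_port=31147)
print(json.dumps(service, indent=2))
```

When nodePort is omitted, Kubernetes picks a free port from the range itself, which is how the port shown in the earlier hello-minikube example was assigned.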

Services and Labels

Services map to a set of Pods using labels and selectors, a grouping mechanism that allows logical operations on Kubernetes objects. Labels are key/value pairs attached to objects that can be used in multiple ways:

- Assign objects for development, testing, and production.

- Embed version tags.

- Classify an object using tags.

Labels can also be attached to objects during creation or later and can be replaced at any time.
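The matching of labels against a selector can be sketched in a few lines. This is a simplified model of equality-based selection only; the function names and the sample Pod names and labels are hypothetical.

```python
def matches(selector, labels):
    """Return True if every key/value pair in selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector, objects):
    """Return the names of the objects whose labels satisfy the selector."""
    return [name for name, labels in objects.items() if matches(selector, labels)]


# Hypothetical Pods with labels for application, environment, and version.
pods = {
    "web-1": {"app": "web", "env": "production", "version": "1.0"},
    "web-2": {"app": "web", "env": "testing", "version": "1.1"},
    "db-1": {"app": "db", "env": "production"},
}

print(select({"app": "web"}, pods))                       # ['web-1', 'web-2']
print(select({"app": "web", "env": "production"}, pods))  # ['web-1']
print(select({"env": "production"}, pods))                # ['web-1', 'db-1']
```

A Service with the selector app=web would route traffic to both web Pods, while adding env=production narrows the set to one.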

Scaling Applications

Scaling is an essential part of running an application. So far, we have created a cluster and a Deployment with a single Pod to run our application. As traffic increases, we need to scale the application so that it can withstand the load from users. Scaling is done by changing the number of replicas in a Deployment. Kubernetes then ensures that new Pods are created and scheduled on nodes with available resources, increasing the number of Pods until it matches the new desired state.

Kubernetes Auto-scaling

Kubernetes also supports auto-scaling of Pods, but this is a somewhat more complicated process. It is also possible to scale to zero, which terminates all Pods of the specified Deployment. To run multiple instances of an application, we need a way to distribute traffic among them. Services have a built-in load balancer that distributes network traffic among all available Pods of a Deployment. Services continuously monitor running Pods using endpoints to ensure that traffic is sent only to available Pods.
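For a feel of how auto-scaling decides on a replica count, the sketch below applies the formula documented for the Kubernetes Horizontal Pod Autoscaler: the desired count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up, with no change when the ratio is within a small tolerance of 1. The Horizontal Pod Autoscaler is not covered in this tutorial, and the function name and sample numbers are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Estimate the replica count from an observed and a target metric value."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the observed value is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Two Pods averaging 90% CPU against a 50% target: scale up.
print(desired_replicas(2, 90, 50))
# Four Pods averaging 25% CPU against a 50% target: scale down.
print(desired_replicas(4, 25, 50))
# Three Pods averaging 52% CPU against a 50% target: within tolerance.
print(desired_replicas(3, 52, 50))
```

The real autoscaler also respects configured minimum and maximum replica counts and handles missing metrics, which this sketch leaves out.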

Updating Applications

Both developers and users expect applications to be updated continuously. Kubernetes does this with rolling updates, which allow a Deployment to be updated with zero downtime by gradually replacing Pod instances with new ones. New Pods are scheduled on nodes with available resources.

By default, the maximum number of Pods that can be unavailable during the update and the maximum number of extra Pods that can be created are each 25% of the desired replica count. Both parameters can be set to either absolute numbers or percentages of Pods. In Kubernetes, updates are versioned, and any Deployment update can be reverted to a previous stable version.
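The effect of these two limits is easier to see with numbers. The sketch below converts each setting into an absolute Pod count the way the Deployment documentation describes it: a percentage for the unavailable limit is rounded down, and a percentage for the extra-Pod limit is rounded up. The function names are hypothetical, and the 25% values used as parameter defaults here are example inputs for this sketch.

```python
import math


def resolve(value, replicas, round_up):
    """Turn an absolute number or a percentage string into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rolling_update_bounds(replicas, max_unavailable="25%", max_surge="25%"):
    """Return the fewest available and the most total Pods during an update."""
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    surge = resolve(max_surge, replicas, round_up=True)
    return {
        "min_available": replicas - unavailable,
        "max_total": replicas + surge,
    }


print(rolling_update_bounds(10))        # {'min_available': 8, 'max_total': 13}
print(rolling_update_bounds(4, 1, 1))   # {'min_available': 3, 'max_total': 5}
print(rolling_update_bounds(1))         # {'min_available': 1, 'max_total': 2}
```

With a single replica and percentage limits, the old Pod stays up until its replacement is ready, which is what keeps the application reachable during the update.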

As with scaling an application, if the Deployment is exposed publicly, the Service load-balances traffic only to Pods that are available during the update. An available Pod is an instance that is ready to serve the application's users. Rolling updates allow us to:

- Promote applications from one environment to another through container image updates.

- Roll back to previous versions.

- Perform continuous integration and continuous delivery of applications with zero downtime.

Create an Application in Kubernetes

Now that we have covered the basics of Kubernetes, we can use Minikube to run a sample application. Above, we ran the hello-minikube deployment using an existing image. Now we will launch a small, ready-made Node.js container image. Let's look at what is inside this image. There are two files:

Dockerfile

FROM node:6.14.2

EXPOSE 8080

COPY server.js .

CMD [ "node", "server.js" ]

The second file is written in JavaScript:

server.js

var http = require('http');

var handleRequest = function(request, response) {

console.log('Get request received URL ' + request.url);

response.writeHead(200);

response.end('Hello World!');

};

var www = http.createServer(handleRequest);

www.listen(8080);

For more information on how to use Docker to build an image, see our Docker for Beginners tutorial.

As in the previous example, we go into our console and run this command.

root@host:~# minikube start

😄 minikube v1.13.1 on Ubuntu 18.04

✨ Using the docker driver based on user configuration

👍 Starting control plane node minikube in cluster minikube

🔥 Creating docker container (CPUs=2, Memory=2200MB) ...

🐳 Preparing Kubernetes v1.19.2 on Docker 19.03.8 ...

🔎 Verifying Kubernetes components...

🌟 Enabled addons: default-storageclass, storage-provisioner

🏄 Done! kubectl is now configured to use "minikube" by default

root@host:~#

Next, enter the following command.

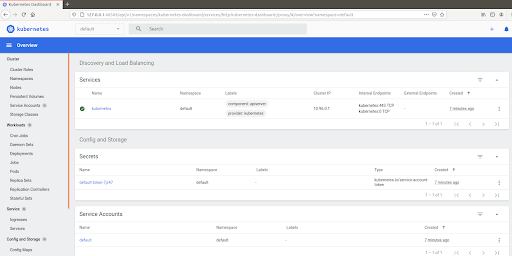

root@host:~# minikube dashboard

🔌 Enabling dashboard ...

🤔 Verifying dashboard health ...

🚀 Launching proxy ...

🤔 Verifying proxy health ...

🎉 Opening http://127.0.0.1:37797/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/ in your default browser...

This command opens the Kubernetes dashboard in a browser. It is a handy tool for reviewing status, metrics, and different types of logs. The dashboard makes it easier to visualize many of the functions available in Minikube, although many find Kubernetes easier to interact with via the terminal.

This is how the dashboard looks. To return to the console, press Ctrl + C.

Create a Deployment

A Kubernetes Pod is a group of one or more containers linked together for administration and networking purposes. The Pod in this tutorial has only one container. A Kubernetes Deployment checks the health of our Pod and restarts the Pod's container if it terminates. Deployments are the recommended way to manage the creation and scaling of Pods.

We use the kubectl create command to create a Deployment that manages a Pod. The Pod runs a container based on the provided Docker image.

root@host:~# kubectl create deployment hello-node --image=k8s.gcr.io/echoserver:1.4

deployment.apps/hello-node created

root@host:~#

Deployment Status

Let's see whether it is deployed and what its status is.

root@host:~# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 0/1 1 0 24s

root@host:~#

The READY column shows 0/1, which means the Pod is not yet ready. Wait between 30 seconds and a couple of minutes, and then check again using this command.

root@host:~# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 82s

root@host:~#

Check Cluster Events

root@host:~# kubectl get events

LAST SEEN TYPE REASON OBJECT MESSAGE

2m2s Normal Scheduled pod/hello-node-7567d9fdc9-z994l Successfully assigned default/hello-node-7567d9fdc9-z994l to minikube

2m1s Normal Pulling pod/hello-node-7567d9fdc9-z994l Pulling image "k8s.gcr.io/echoserver:1.4"

74s Normal Pulled pod/hello-node-7567d9fdc9-z994l Successfully pulled image "k8s.gcr.io/echoserver:1.4" in 47.778693004s

73s Normal Created pod/hello-node-7567d9fdc9-z994l Created container echoserver

73s Normal Started pod/hello-node-7567d9fdc9-z994l Started container echoserver

2m2s Normal SuccessfulCreate replicaset/hello-node-7567d9fdc9 Created pod: hello-node-7567d9fdc9-z994l

2m2s Normal ScalingReplicaSet deployment/hello-node Scaled up replica set hello-node-7567d9fdc9 to 1

16m Normal NodeHasSufficientMemory node/minikube Node minikube status is now: NodeHasSufficientMemory

16m Normal NodeHasNoDiskPressure node/minikube Node minikube status is now: NodeHasNoDiskPressure

16m Normal NodeHasSufficientPID node/minikube Node minikube status is now: NodeHasSufficientPID

15m Normal Starting node/minikube Starting kubelet.

15m Normal NodeHasSufficientMemory node/minikube Node minikube status is now: NodeHasSufficientMemory

15m Normal NodeHasNoDiskPressure node/minikube Node minikube status is now: NodeHasNoDiskPressure

15m Normal NodeHasSufficientPID node/minikube Node minikube status is now: NodeHasSufficientPID

15m Normal NodeAllocatableEnforced node/minikube Updated Node Allocatable limit across pods

15m Normal NodeReady node/minikube Node minikube status is now: NodeReady

15m Normal RegisteredNode node/minikube Node minikube event: Registered Node minikube in Controller

15m Normal Starting node/minikube Starting kube-proxy.

root@host:~#

To verify the kubectl configuration, we run the following command.

root@host:~# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /home/root/.minikube/ca.crt
    server: https://172.17.0.3:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate: /home/margo/.minikube/profiles/minikube/client.crt
    client-key: /home/root/.minikube/profiles/minikube/client.key

root@host:~#

By default, the Pod is only accessible via its internal IP address within the Kubernetes cluster. To make the hello-node container available from outside the Kubernetes virtual network, we must expose the Pod as a Kubernetes Service. We will accomplish this using the following command.

root@host:~# kubectl expose deployment hello-node --type=LoadBalancer --port=8080

service/hello-node exposed

root@host:~#

Note: The --type=LoadBalancer flag requests a Service type that relies on a load balancer supplied by an external cloud provider.

To check the service we just created, run this command.

root@host:~# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-node LoadBalancer 10.98.254.176 <pending> 8080:30943/TCP 87s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20m

root@host:~#

Load Balancing

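On cloud providers that support load balancers, an external IP address is provisioned to access the Service. Minikube does not provision one by default, which is why the EXTERNAL-IP column above shows <pending>. Instead, on Minikube the LoadBalancer type makes the Service available through the minikube service command.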

Run this command.

root@host:~# minikube service hello-node

|-----------|------------|-------------|-------------------------|

| NAMESPACE | NAME | TARGET PORT | URL |

|-----------|------------|-------------|-------------------------|

| default | hello-node | 8080 | http://172.17.0.3:30943 |

|-----------|------------|-------------|-------------------------|

🎉 Opening service default/hello-node in default browser...

root@host:~#

This opens a browser window that serves our application and shows the application's response.

Minikube Addons

Minikube also includes a handy feature for working with add-ons. Enter the following command to review the available add-ons.

root@host:~# minikube addons list

|-----------------------------|----------|--------------|

| ADDON NAME | PROFILE | STATUS |

|-----------------------------|----------|--------------|

| ambassador | minikube | disabled |

| csi-hostpath-driver | minikube | disabled |

| dashboard | minikube | enabled ✅ |

| default-storageclass | minikube | enabled ✅ |

| efk | minikube | disabled |

| freshpod | minikube | disabled |

| gcp-auth | minikube | disabled |

| gvisor | minikube | disabled |

| helm-tiller | minikube | disabled |

| ingress | minikube | disabled |

| ingress-dns | minikube | disabled |

| istio | minikube | disabled |

| istio-provisioner | minikube | disabled |

| kubevirt | minikube | disabled |

| logviewer | minikube | disabled |

| metallb | minikube | disabled |

| metrics-server | minikube | disabled |

| nvidia-driver-installer | minikube | disabled |

| nvidia-gpu-device-plugin | minikube | disabled |

| olm | minikube | disabled |

| pod-security-policy | minikube | disabled |

| registry | minikube | disabled |

| registry-aliases | minikube | disabled |

| registry-creds | minikube | disabled |

| storage-provisioner | minikube | enabled ✅ |

| storage-provisioner-gluster | minikube | disabled |

| volumesnapshots | minikube | disabled |

|-----------------------------|----------|--------------|

root@host:~#

This command displays a list of currently supported add-ons.

Minikube Built-in Addons

Minikube has a set of built-in add-ons (resources that extend Kubernetes functionality) that can be enabled, disabled, and opened in the local environment. To enable an add-on, such as metrics-server, use this command.

root@host:~# minikube addons enable metrics-server

🌟 The 'metrics-server' addon is enabled

root@host:~#

Now let's view the newly created Service and the Pod that backs it.

root@host:~# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-f9fd979d6-ldt7v 1/1 Running 0 27m

pod/etcd-minikube 1/1 Running 0 27m

pod/kube-apiserver-minikube 1/1 Running 0 27m

pod/kube-controller-manager-minikube 1/1 Running 0 27m

pod/kube-proxy-p5bpt 1/1 Running 0 27m

pod/kube-scheduler-minikube 1/1 Running 0 27m

pod/metrics-server-d9b576748-ncpks 1/1 Running 0 38s

pod/storage-provisioner 1/1 Running 0 27m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 27m

service/metrics-server ClusterIP 10.100.101.34 <none> 443/TCP 38s

root@host:~#

Now we will disable the add-on in almost the same way.

root@host:~# minikube addons disable metrics-server

🌑 "The 'metrics-server' addon is disabled

root@host:~#

Verify Addon Removal

To check that the service has gone, run this command.

root@host:~# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-f9fd979d6-ldt7v 1/1 Running 0 28m

pod/etcd-minikube 1/1 Running 0 28m

pod/kube-apiserver-minikube 1/1 Running 0 28m

pod/kube-controller-manager-minikube 1/1 Running 0 28m

pod/kube-proxy-p5bpt 1/1 Running 0 28m

pod/kube-scheduler-minikube 1/1 Running 0 28m

pod/storage-provisioner 1/1 Running 0 28m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 28m

root@host:~#

Clean Up

Now it's time to clean up all the resources that we have created.

Remove Service

To remove the service, run this command.

root@host:~# kubectl delete service hello-node

service "hello-node" deleted

root@host:~#

Remove Deployment

To remove the deployment, run this command.

root@host:~# kubectl delete deployment hello-node

deployment.apps "hello-node" deleted

root@host:~#

Stop Container

To stop the Minikube container, run this command.

root@host:~# minikube stop

✋ Stopping node "minikube" ...

🛑 Powering off "minikube" via SSH ...

🛑 1 nodes stopped.

root@host:~#

Remove Container

Lastly, to remove the container and cluster in Minikube, run this command.

root@host:~# minikube delete

🔥 Deleting "minikube" in docker ...

🔥 Deleting container "minikube" ...

🔥 Removing /home/margo/.minikube/machines/minikube ...

💀 Removed all traces of the "minikube" cluster.

root@host:~#

Conclusion

We hope this information has provided a more in-depth understanding of one of the most in-demand technologies in recent years in the field of DevOps. In this tutorial, we discussed installing minikube and using it to manipulate a local Kubernetes cluster.

About the Author: Margaret Fitzgerald

Margaret Fitzgerald previously wrote for Liquid Web.
