Large language models tutorial — natural language database queries

If you have been following technology news over the last twelve months, you have heard about large language models (LLMs) like GPT and LaMDA several times. Chatbots like ChatGPT, Claude 2, and Google Bard are powered by these LLMs. Over the last year, large language models have completely transformed workflows in many industries, including software development and database management.

When it comes to database management, data analysts can use tools like ChatGPT and other products built on large language models to speed up the querying process significantly. With these tools, you only need to enter a prompt in natural language, and they will help you convert it into a query that your database management software understands.

In this large language models tutorial, we will explore how to leverage the power of LLMs to enhance your effectiveness and efficiency when querying databases. But before we dive into that, let's begin with an overview of large language models.

An overview and tutorial — large language models (LLMs)

LLMs are a type of artificial intelligence (AI) algorithm that leverages deep learning techniques and vast datasets to comprehend, summarize, generate, and predict new content. They are closely associated with the broader category of generative AI, specifically engineered to facilitate text-based content creation.

All language models undergo an initial training phase using a specific dataset. Subsequently, they employ various techniques to create relationships and generate fresh content based on the knowledge acquired during training. LLMs find widespread application in the field of natural language processing (NLP), where users input queries in natural language to obtain meaningful results. The same approach applies to database management, where users can query databases in natural language.

Large language model examples

Several big tech companies and startups, including Google, OpenAI, and Meta, have been developing large language models over the years. Some popular large language model examples include the following:

- GPT-4: GPT-4 is an advanced, multilingual large language model created by OpenAI that excels in creative tasks, including music and screenplays, with solid accuracy in English and other languages.

- LaMDA: LaMDA, developed by Google, is a conversational large language model family designed for natural language understanding.

- LLaMA (Large Language Model Meta AI): LLaMA, introduced by Meta AI, is a family of large language models focusing on various AI applications.

- BERT (Bidirectional Encoder Representations from Transformers): BERT, created by Google, is known for bidirectional pre-training and excels in a wide range of natural language processing tasks.

- Turing-NLG: Turing-NLG, developed by Microsoft, specializes in generating conversational responses and performs well in chatbot applications.

Use cases in database querying

Some of the popular use cases in database querying include the following:

- Customer Support & Chatbots: Natural language database queries empower chatbots to comprehend and respond to customer inquiries effectively. This functionality is critical in enhancing customer support by providing instant, round-the-clock assistance. Businesses can address common issues and frequently asked questions without human intervention, leading to improved customer satisfaction and operational efficiency.

- Data Analysis & Reporting: Natural language queries facilitate rapid data analysis and reporting for analysts and business users. This approach eliminates the need for extensive knowledge of SQL and data analysis tools, enabling users to extract valuable insights from large datasets. This user-friendly method of querying databases enhances decision-making processes, allowing businesses to make informed choices based on real-time data analysis.

- Data Filtering & Modification: Natural language commands allow users to apply filters and conditions seamlessly translated into SQL statements for precise data retrieval. Furthermore, users can utilize natural language to insert, update, or delete records in a database, while the SQL engine ensures data integrity. This combined approach simplifies data manipulation tasks and ensures data accuracy and consistency.

- Data Backup & Restore: Natural language database queries simplify the often complex data backup and restoration processes. Users can instruct the database to perform these critical tasks using natural language, and the system translates these instructions into SQL commands. This activity streamlines data preservation and retrieval, ensuring that companies can recover all the data during unexpected incidents, such as system failures or data loss.

- User Access Control with SQL: Natural language is employed to manage user access and permissions within the database, enhancing data security and ensuring compliance with SQL-based role and privilege assignments. This use case is particularly important in controlling who can access and modify sensitive data, providing a robust layer of protection against unauthorized access and data breaches.

- Data Validation & Integrity Checks: Natural language queries encompass instructions for data validation and integrity checks, prompting the database to enforce predefined constraints and rules specified in SQL. By using natural language for these checks, users can easily ensure that the data remains accurate, consistent, and compliant with business rules and industry standards.

A look at a large language models tutorial and some examples for natural language database queries

In this section of our large language models tutorial, we will explore how to query data from a database using natural language and have the large language model write the SQL query for you. To demonstrate this, we will utilize a database table that presents information about employees in a specific company:

| Employee ID | First Name | Last Name | Date of Birth | Department | Salary |
| --- | --- | --- | --- | --- | --- |
| 001 | John | Smith | 1985-03-15 | HR | 55000 |
| 002 | Sarah | Johnson | 1990-07-22 | Marketing | 60000 |
| 003 | Michael | Brown | 1982-12-10 | Finance | 65000 |
| 004 | Emily | Davis | 1995-05-28 | IT | 70000 |
| 005 | David | Lee | 1988-09-02 | Sales | 58000 |

We will subsequently employ ChatGPT, which uses the GPT-3.5 large language model, to generate our SQL queries. Here are some samples of queries you can write in your natural language that ChatGPT can help you convert into SQL queries that your database software understands.

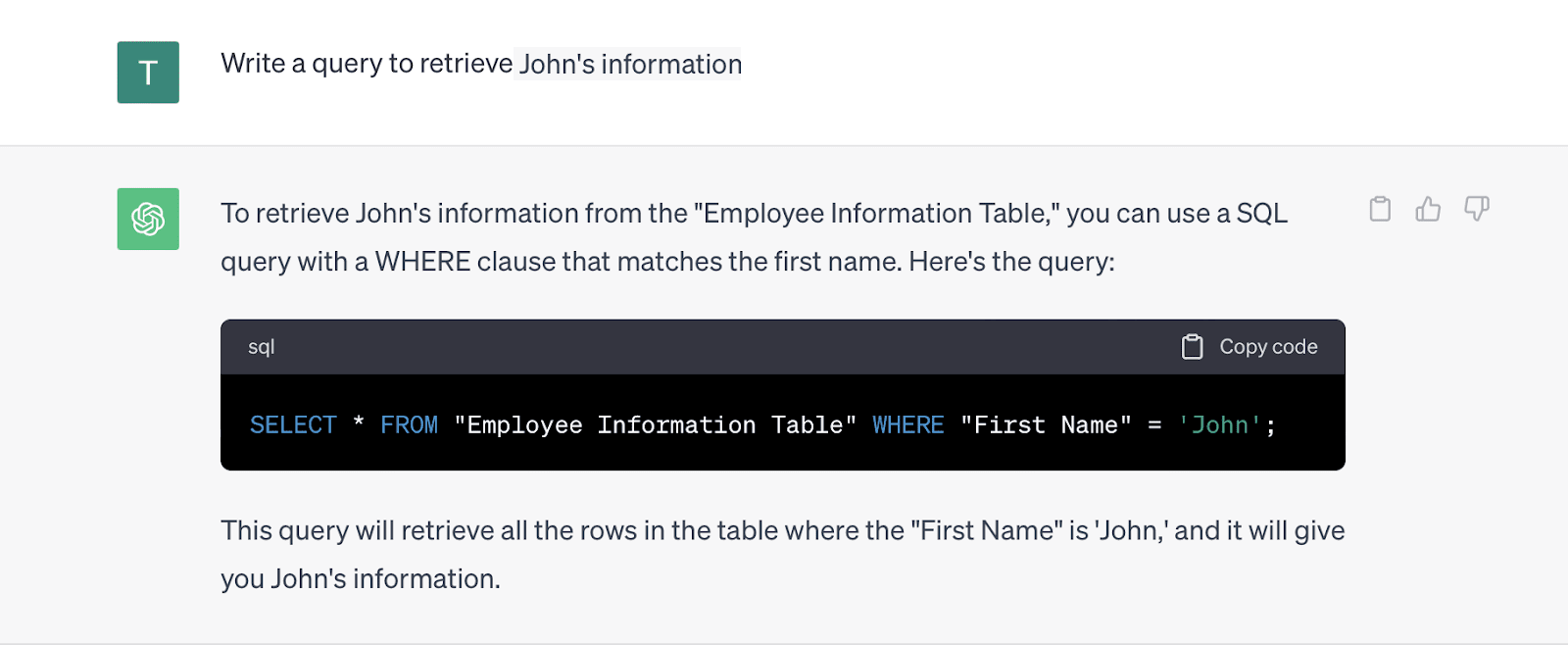

Sample #1 — finding specific employee data

Here is a sample query to retrieve the data of a specific employee:

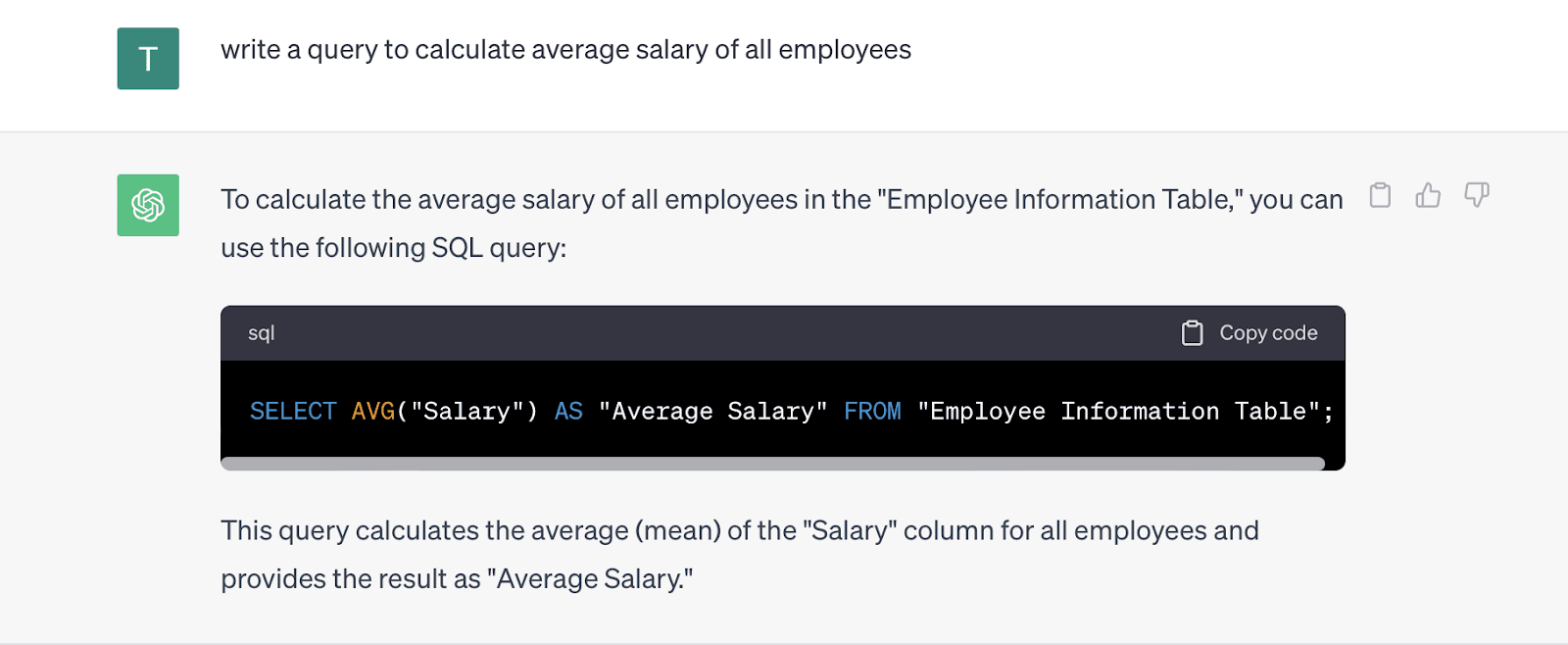

Sample #2 — finding average salary data of all employees

Below is a sample query that calculates the average salary of all employees:

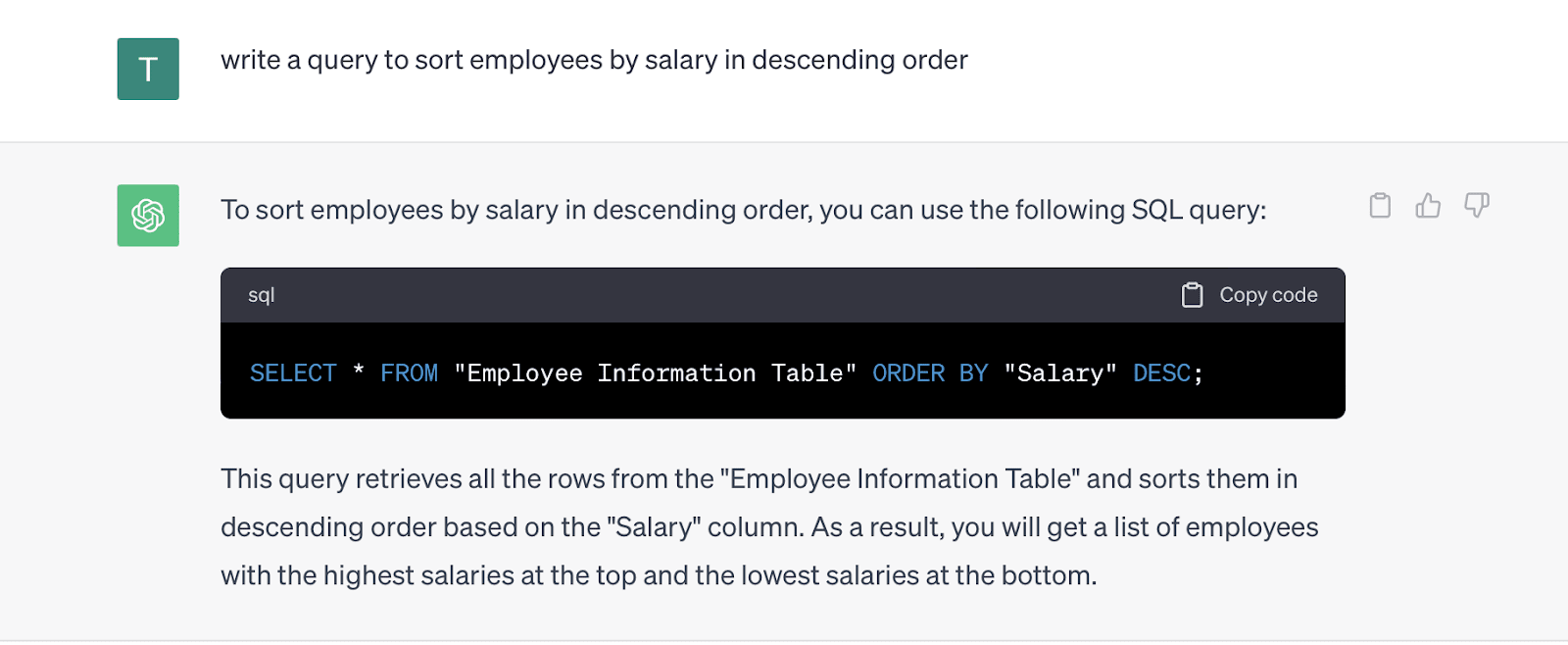

Sample #3 — sorting employees using salary data

You can also use a query similar to the one below to sort employees by salary in descending order:

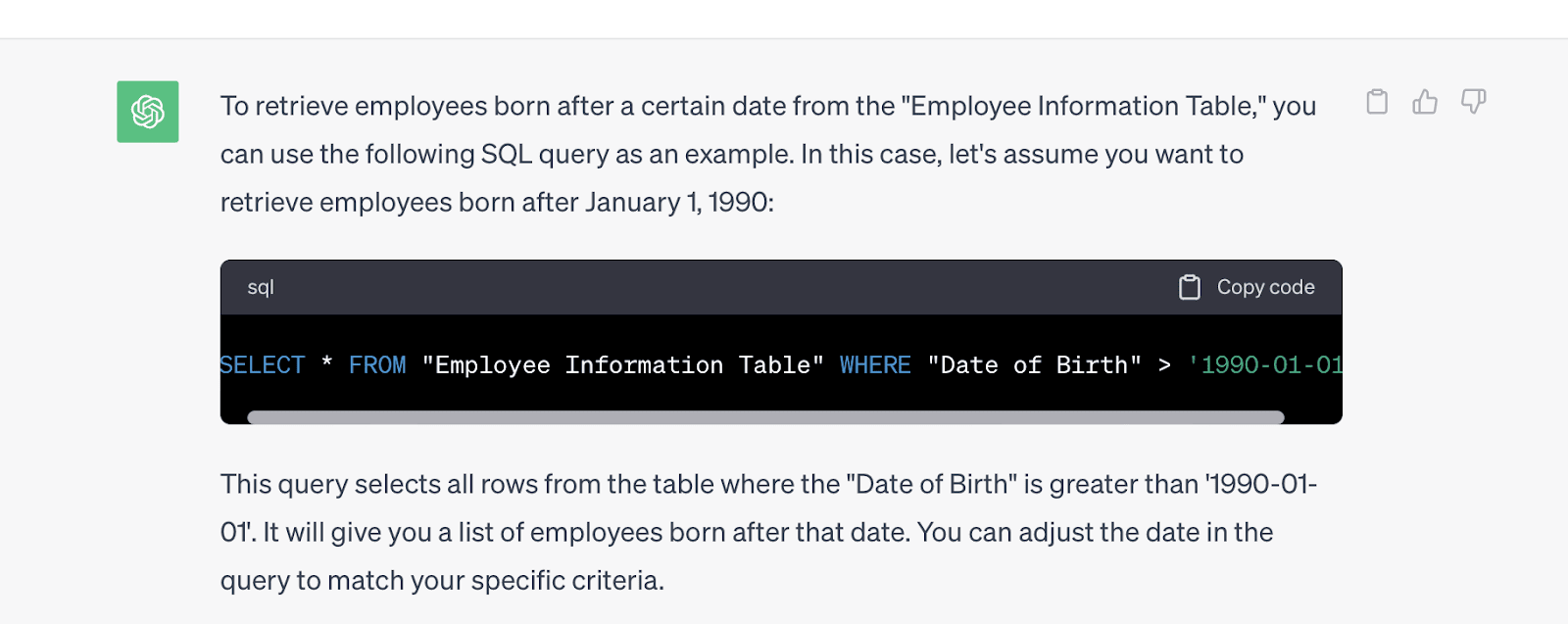

Sample #4 — fetching employee data using date of birth

The following sample query retrieves all employees born after a specified date of birth:
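As a rough sketch of the kind of SQL these four prompts might produce, the queries below run against an in-memory SQLite copy of the employee table. The table name `employees`, the snake_case column names, the employee ID `003`, and the cutoff date `1988-01-01` are all assumptions made for illustration; the tutorial does not specify them, and the output of a real model may be worded differently.

```python
import sqlite3

# Assumed names: `employees` and the snake_case columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE employees (
        employee_id   TEXT PRIMARY KEY,
        first_name    TEXT,
        last_name     TEXT,
        date_of_birth TEXT,   -- ISO 8601 strings compare in date order
        department    TEXT,
        salary        INTEGER
    )
    """
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("001", "John", "Smith", "1985-03-15", "HR", 55000),
        ("002", "Sarah", "Johnson", "1990-07-22", "Marketing", 60000),
        ("003", "Michael", "Brown", "1982-12-10", "Finance", 65000),
        ("004", "Emily", "Davis", "1995-05-28", "IT", 70000),
        ("005", "David", "Lee", "1988-09-02", "Sales", 58000),
    ],
)

# Sample #1: data for one specific employee (ID chosen arbitrarily).
specific = conn.execute(
    "SELECT * FROM employees WHERE employee_id = ?", ("003",)
).fetchone()

# Sample #2: average salary of all employees.
average_salary = conn.execute("SELECT AVG(salary) FROM employees").fetchone()[0]

# Sample #3: employees sorted by salary, highest first.
by_salary = conn.execute(
    "SELECT first_name, last_name, salary FROM employees ORDER BY salary DESC"
).fetchall()

# Sample #4: employees born after a cutoff date (cutoff chosen arbitrarily).
born_after = conn.execute(
    "SELECT first_name, last_name, date_of_birth FROM employees "
    "WHERE date_of_birth > ? ORDER BY date_of_birth",
    ("1988-01-01",),
).fetchall()

print(specific)
print(average_salary)
print(by_salary)
print(born_after)
```

Running generated SQL against a small throwaway copy of the data like this is a cheap way to confirm that a query does what the prompt asked before it touches a production database.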

Describing the data in your database

When using large language models like OpenAI’s GPT, you must describe your table in detail to get the most accurate output. Here are some of the different ways to outline the details of your database for the LLM.

Providing context to large language models

The context you give the model typically includes information about the structure of your database, such as the names of columns, the type of data they contain, and examples of the data. One way to provide context is by pasting the entire table into the LLM's input and instructing it to use the data to generate the specific queries you need.

If you're using OpenAI's GPT-4 or a similar advanced LLM, you also have the option to provide a screenshot of your table as input. The model can then interpret the contents of the screenshot, including the table's structure and data.
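For the text-based route, the context can also be assembled programmatically rather than pasted by hand. The following is a minimal sketch, assuming a SQLite database; `describe_table` and `build_prompt` are hypothetical helper names, and the prompt wording is only one possible phrasing.

```python
import sqlite3


def describe_table(conn: sqlite3.Connection, table: str, sample_rows: int = 3) -> str:
    """Return a plain-text description of `table` to place ahead of a question.

    `table` is interpolated into SQL, so pass only trusted table names.
    """
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    if not columns:
        raise ValueError(f"unknown table: {table}")
    lines = ["Database: SQLite", f"Table: {table}", "Columns:"]
    for _cid, name, col_type, _notnull, _default, _pk in columns:
        lines.append(f"- {name} ({col_type})")
    lines.append("Sample rows:")
    for row in conn.execute(f'SELECT * FROM "{table}" LIMIT ?', (sample_rows,)):
        lines.append("  " + " | ".join(str(value) for value in row))
    return "\n".join(lines)


def build_prompt(context: str, question: str) -> str:
    """Combine the table description with the natural language request."""
    return f"{context}\n\nWrite one SQL query that answers this request:\n{question}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (first_name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("John", "HR", 55000), ("Sarah", "Marketing", 60000)],
)

context = describe_table(conn, "employees")
question = "What is the average salary per department?"
prompt = build_prompt(context, question)
print(prompt)
```

Limiting the sample to a few rows keeps the prompt short and avoids sending an entire table, which matters when the data is large or sensitive.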

Developing custom applications

For more complex databases or when you require a more integrated solution, you can develop a custom application that interacts with OpenAI's GPT-4 or other LLM APIs using LangChain. LangChain is a framework for developing apps that interact with large language models. With such applications, you can input your natural language queries, and the application will use the LLM to generate the corresponding SQL query.

In the background, the application can subsequently execute the SQL query on the database and provide the results. For enhanced scalability, you have the option to develop and deploy your application using reliable cloud server providers like Liquid Web. These providers enable you to construct and launch your application while utilizing precise resource levels, resulting in cost savings without compromising performance.
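The flow described above (question in, SQL generated, query executed, results returned) can be sketched in a few lines. This sketch deliberately leaves out the framework and any real API call: `fake_llm` is a hypothetical stand-in that returns a fixed query, and `answer_question` is an illustrative name, not part of any library. In a real application, the `generate_sql` argument would wrap a call to your chosen model.

```python
import sqlite3
from typing import Callable, List, Tuple


def answer_question(
    conn: sqlite3.Connection,
    question: str,
    generate_sql: Callable[[str], str],
) -> Tuple[str, List[str], list]:
    """Turn a question into SQL via `generate_sql`, run it, and return the results."""
    sql = generate_sql(question).strip().rstrip(";")
    # Refuse anything that is not a plain read. A production app would need
    # stricter safeguards, such as read-only credentials and human review.
    if not sql.lower().startswith("select"):
        raise ValueError(f"refusing to run non-SELECT statement: {sql!r}")
    cursor = conn.execute(sql)
    headers = [column[0] for column in cursor.description]
    return sql, headers, cursor.fetchall()


def fake_llm(question: str) -> str:
    """Hypothetical stand-in for a real model call; always returns the same query."""
    return (
        "SELECT first_name, salary FROM employees "
        "WHERE salary > 60000 ORDER BY salary DESC;"
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (first_name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Michael", "Finance", 65000), ("Emily", "IT", 70000), ("David", "Sales", 58000)],
)

sql, headers, rows = answer_question(conn, "Who earns more than 60,000?", fake_llm)
print(sql)
print(headers)
for row in rows:
    print(row)
```

Passing the model call in as a function keeps the database logic testable without network access and makes it easy to swap one LLM provider for another.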

Best practices for using LLMs to write SQL queries

For the best results, follow these best practices when writing SQL queries using large language models:

- Provide Relevant Context: Lay out the tables and columns involved in the query, define relationships between tables, specify the type of database you're using, and provide sample data if possible. Your LLM needs to clearly understand the environment for more accurate query generation.

- Simplify the Problem: Once you have established context, simplify the SQL problem by breaking it down to its simplest form. Distill the requirements and eliminate any unnecessary details.

- Be Explicit About Constraints & Desired Output: Specify any constraints that should be applied to the query, including filters, ordering requirements, and aggregations. For example, if performance matters, ask the model to avoid full table scans.

- Iterate on Your Query: It's essential to understand that the initial query generated may not be perfect. Expect to iterate on your query by refining it, asking for adjustments, and running it multiple times. This iterative process allows you to fine-tune the query until it meets your exact needs.

- Fact-Checking: Before using LLMs to generate queries, knowing and understanding how to use SQL is crucial. One of the shortcomings of LLMs is that they can, at times, make errors. In such cases, you must apply your SQL knowledge to fact-check the query before implementing it.
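Part of the fact-checking and constraint-checking described above can be automated before a generated query ever runs. The sketch below assumes SQLite and uses its `EXPLAIN QUERY PLAN` statement, which compiles a query without executing it; `review_sql` is a hypothetical helper, and its checks are intentionally crude. It complements, rather than replaces, reading the query yourself.

```python
import sqlite3
from typing import List


def review_sql(conn: sqlite3.Connection, sql: str) -> List[str]:
    """Return a list of problems found in `sql` without running it."""
    statement = sql.strip().rstrip(";")
    # Crude check: also rejects a semicolon inside a string literal.
    if ";" in statement:
        return ["more than one statement"]
    if not statement.lower().startswith("select"):
        return ["not a SELECT statement"]
    try:
        # Compiling the plan catches syntax errors and unknown tables or columns.
        plan = conn.execute("EXPLAIN QUERY PLAN " + statement).fetchall()
    except sqlite3.Error as exc:
        return [f"does not compile: {exc}"]
    # Each plan row is (id, parent, notused, detail); "SCAN" marks a full table scan.
    return [f"full scan: {row[3]}" for row in plan if row[3].startswith("SCAN")]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (first_name TEXT, department TEXT, salary INTEGER)")

query = "SELECT * FROM employees WHERE department = 'IT'"
not_select = review_sql(conn, "DELETE FROM employees")
bad_column = review_sql(conn, "SELECT bonus FROM employees")
before_index = review_sql(conn, query)

# With an index on the filtered column, the plan becomes a SEARCH instead of a SCAN.
conn.execute("CREATE INDEX idx_employees_department ON employees (department)")
after_index = review_sql(conn, query)

print(not_select)
print(bad_column)
print(before_index)
print(after_index)
```

A full scan is not always a problem on a small table, so treat that warning as a prompt to iterate on the query or add an index, not as a hard failure.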

Final thoughts

In summary, using large language models to write queries for database management can significantly enhance the effectiveness and efficiency of data analysts. This large language models tutorial has shared procedures and best practices for optimizing the use of LLMs as a data analyst.

You can directly use LLM chatbots such as ChatGPT, Claude 2, or Google Bard to generate queries by providing text or image inputs. For greater efficiency, you can consider building a simple app using LangChain that enables database querying through natural language inputs.

If you are ready to leverage the power of LLMs for querying your databases, you can make use of Liquid Web's database hosting service. Liquid Web provides cost-effective and scalable hosting solutions, enabling your business to expand according to your requirements without imposing a significant financial strain. WordPress-hosted websites can also utilize this service for more scalability, especially if the database is the bottleneck.


About the Author: Chika Ibeneme

Chika Ibeneme is a Community Support Agent at The Events Calendar. He received his BA in Computer Science in 2017 from Northern Caribbean University and has over 5 years of technical experience assisting customers and clients. You can find him working on various WordPress and Shopify projects.
