How to Install and Use Containerization

What is Containerization?

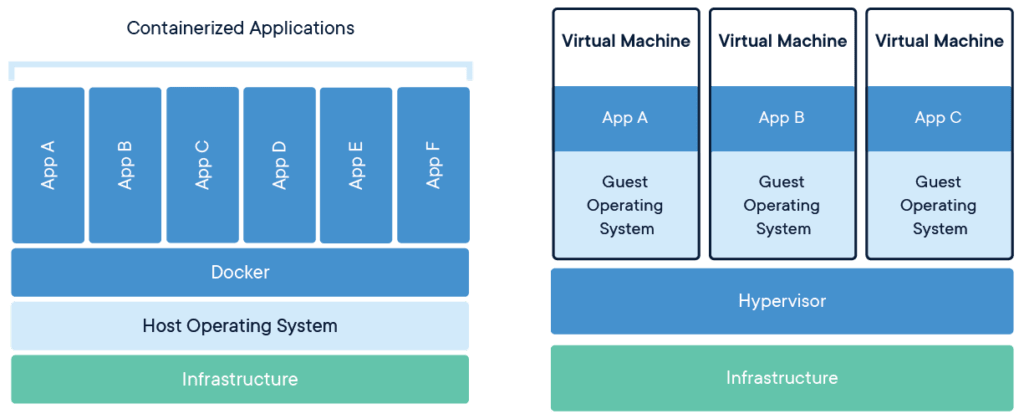

Containerization is a form of operating-system-level virtualization developed in response to the drawbacks of hardware-level virtualization. Because the latter runs a full guest operating system in every virtual machine, it is resource-intensive and incurs significant overhead. Containers are much lighter: since they share the host machine’s kernel, no resources are spent running separate operating systems. This allows applications to be deployed faster and with a smaller footprint.

How does Containerization Work?

Containers encapsulate an application and all of its dependencies in their own environment, so the application runs in isolation while sharing the host’s operating system kernel and resources. Each container nevertheless works with its own private filesystem, which is provided by a container image. An image contains everything needed to run an application: the code, runtimes, dependencies, and any other filesystem objects that might be required.

Aside from being lightweight, containers are popular for several other reasons. They are flexible, as even complex applications can be containerized. They are portable, so applications can be built locally, deployed to the cloud, and run anywhere. Container images are easy to share, and there are several image-sharing hubs on the internet. Containers also provide security, as they apply aggressive constraints and isolation to processes by default, without any configuration required from the user. Finally, containers are self-sufficient and encapsulated, which means that they can be replaced or upgraded without disrupting other containers.

What is Docker?

As containers grew in popularity, the need for standards in container technology arose. The Open Container Initiative (OCI) was therefore established in June 2015 by Docker and other industry leaders to promote minimal, open standards for container technology. While Docker is one of the most popular container engines, there are alternatives such as CoreOS rkt, the Apache Mesos Containerizer, LXC (Linux Containers), OpenVZ, and CRI-O.

In this tutorial, however, we will focus on Docker, as it is a well-known and widely used containerization tool. It is an open-source project used by developers and sysadmins to automate the deployment of applications inside containers. Before we look into the installation process, we need to understand some of the terms used in this context.

Docker Engine

The Docker Engine is an open-source client-server application composed of a daemon with server functions, a REST-based programming interface, and a command-line interface (CLI). Commonly used CLI commands include docker build, docker pull, and docker run.

The client and the daemon can run on the same machine or on separate machines. The client talks to the daemon through the REST API, over a UNIX socket or a network interface.
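To make the REST interface a little more concrete, here is a minimal sketch that asks a local daemon for its version over the UNIX socket. It assumes the daemon listens on /var/run/docker.sock (the usual default on Linux) and that curl is installed. The DOCKER_SOCK variable and the docker_engine_version function are names made up for this illustration.

```shell
#!/bin/sh
# Assumption: the daemon listens on the usual default socket path.
# DOCKER_SOCK is a hypothetical override variable used only in this sketch.
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Print the daemon's version information as JSON, or explain why we cannot.
docker_engine_version() {
    if [ ! -S "$DOCKER_SOCK" ]; then
        echo "no Docker socket at $DOCKER_SOCK" >&2
        return 1
    fi
    if ! command -v curl >/dev/null 2>&1; then
        echo "curl is not installed" >&2
        return 1
    fi
    # GET /version is an Engine API endpoint. The host part of the URL is
    # only a placeholder when curl talks over a UNIX socket.
    curl --silent --fail --unix-socket "$DOCKER_SOCK" http://localhost/version
}

docker_engine_version || echo "Docker daemon not reachable (is it installed and running?)"
```

The docker command-line client sends the same kind of requests on our behalf, so this is mostly useful for seeing what happens behind the scenes.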

Docker Image

For any application to run, it needs other files, dependencies, and system libraries, not just the main application code. A Docker image is a multi-layered file that includes everything required for the application to be executable. These images can then be shared, used, and deployed anywhere else.

Docker Hub

In order to share these images, the community created a cloud-based repository of images called Docker Hub. We can pull images from there and use them on our servers.

Installing Docker

Installing Docker is reasonably straightforward, but a few preparatory steps come first. First, we have to check that the CentOS-Extras repository is enabled. It is enabled by default, but if it has been disabled, it needs to be re-enabled. We can check its status with the following command:

[root@host ~]# grep -m1 enabled /etc/yum.repos.d/CentOS-Extras.repo

enabled=1

Since the value is 1, the repository is enabled. A value of 0 would mean that it is disabled, and we would need to edit the file with nano or vim to change it to 1.
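The same check can be wrapped in a small helper for use in a setup script. This is only a sketch: repo_enabled is a hypothetical function name, and the demo works on a temporary file rather than the real file under /etc/yum.repos.d.

```shell
#!/bin/sh
# repo_enabled FILE: succeed if the first "enabled" line in FILE is enabled=1.
repo_enabled() {
    # grep -m1 stops after the first match; tr removes any spaces around "=".
    [ "$(grep -m1 '^enabled' "$1" 2>/dev/null | tr -d ' ')" = "enabled=1" ]
}

# Demo on a throwaway file so that nothing under /etc is touched.
demo_repo=$(mktemp)
printf '[extras]\nname=CentOS Extras\nenabled=0\n' > "$demo_repo"

repo_enabled "$demo_repo" && echo "before: enabled" || echo "before: disabled"

# Flip the flag, writing to a second file and moving it back into place.
sed 's/^enabled=0/enabled=1/' "$demo_repo" > "$demo_repo.new"
mv "$demo_repo.new" "$demo_repo"

repo_enabled "$demo_repo" && echo "after: enabled" || echo "after: disabled"
rm -f "$demo_repo"
```

Pointed at the real repository file, the function returns success or failure without printing anything, which makes it easy to use in an if statement.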

Next, we need to uninstall old versions of Docker in case they were installed. The older versions of Docker were called docker or docker-engine and we can remove them, along with their dependencies, with the following command:

[root@host ~]# yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

We can continue with the installation if we get the message that none of these packages were installed in the first place or a message that they are now uninstalled.

Installation methods

Docker Engine can be installed in a few different ways, but we will focus on the first one. The recommended way is to set up Docker’s repositories and install from them. This makes both installation and upgrades easy.

In case of installing Docker on systems with no access to the internet, we can download the RPM package and install it manually. However, upgrades would have to be managed entirely by hand.

It is also possible to use automated scripts for the installation, which is useful in testing and development environments.

Since we will be installing Docker using the first option, we need to set up the repository before proceeding with the installation itself.

Setting up the Repository

First, we will install the yum-utils package just in case it hasn’t been installed already. It provides the yum-config-manager utility that we need in order to set up a stable repository. We can do so with the following commands:

[root@host ~]# yum install -y yum-utils

[root@host ~]# yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

Adding repo from: https://download.docker.com/linux/centos/docker-ce.repo

It is also possible to enable or disable the nightly and test repositories with yum-config-manager by using the --enable and --disable flags. Both are included in the docker-ce.repo file but are disabled by default.

Enabling and disabling the nightly repository can be done with the following commands:

[root@host ~]# yum-config-manager --enable docker-ce-nightly

[root@host ~]# yum-config-manager --disable docker-ce-nightly

Similarly, the test channel can be enabled or disabled with the commands:

[root@host ~]# yum-config-manager --enable docker-ce-test

[root@host ~]# yum-config-manager --disable docker-ce-test

Installing the Docker Engine

The Docker Engine package is now called docker-ce so we can install its latest version, along with containerd, with the following command:

[root@host ~]# yum install docker-ce docker-ce-cli containerd.io

Installing a Specific Docker Version

If we want to install a specific version of Docker Engine, we can do so by specifying its fully qualified package name. If we do not know what versions we have available, we can list them out with this command:

[root@host ~]# yum list docker-ce --showduplicates | sort -r

docker-ce.x86_64    3:19.03.8-3.el7     docker-ce-stable
docker-ce.x86_64    3:19.03.7-3.el7     docker-ce-stable
docker-ce.x86_64    18.06.3.ce-3.el7    docker-ce-stable
docker-ce.x86_64    18.06.2.ce-3.el7    docker-ce-stable

The list we get depends on which repositories are enabled and which version of CentOS our system is running. Once we have the list, we can build the fully qualified package name from the package name docker-ce, a hyphen, and the version string from the second column, starting after the first colon and ending before the first hyphen. For example, the first row above gives the fully qualified package name docker-ce-19.03.8.
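The rule for deriving the version string can be sketched as a small shell function. The version_string name is hypothetical, and the two sample values are taken from the listing above.

```shell
#!/bin/sh
# version_string COLUMN2: drop an optional "epoch:" prefix and everything
# from the first hyphen onward.
version_string() {
    printf '%s\n' "$1" | sed -e 's/^[^:]*://' -e 's/-.*$//'
}

# Second-column values copied from the yum list output.
for col in '3:19.03.8-3.el7' '18.06.3.ce-3.el7'; do
    echo "docker-ce-$(version_string "$col")"
done
```

For these two inputs, the loop prints docker-ce-19.03.8 and docker-ce-18.06.3.ce.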

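The install command then takes the following form, where <VERSION_STRING> stands for the version string we picked from the list:

[root@host ~]# yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io

Starting Docker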

With the Docker Engine installed, we now have to start it. After that, we will also need to add the users to the docker group that was automatically created.

Docker can be started with the systemctl command:

[root@host ~]# systemctl start docker

Now that Docker is running, we can check that everything was done correctly by running the hello-world test image. As a regular user, we need to run it with sudo:

$ sudo docker run hello-world

The docker run command downloads the specified test image and runs it in a container. It should print a message that Docker is installed correctly and give you some more information about using it.

Post Installation Setup

The docker group should already be present, so all we have to do now is add our user to it in order to run docker commands without sudo:

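$ sudo usermod -aG docker $USER

Here $USER expands to the name of the user running the command, so it should be run from that user’s own shell rather than from a root shell, where it would add root to the group instead. The new group membership takes effect at the next login, so we need to log out and back in first. Then, to verify that we can run the command without sudo, we can try running this as the user: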

$ docker run hello-world

If everything completed correctly, this should once again show the message from earlier.
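If the command fails with a permission error instead, one quick thing to check is whether the current login session has picked up the docker group, since group changes only apply to sessions started after the change. The helper below is a minimal sketch, and session_in_group is a hypothetical name.

```shell
#!/bin/sh
# session_in_group GROUP: succeed if the current session's groups include GROUP.
session_in_group() {
    # With no user argument, id -nG lists the groups of the running process,
    # which is what decides whether this session may use the Docker socket.
    id -nG | tr ' ' '\n' | grep -qxF -- "$1"
}

if session_in_group docker; then
    echo "this session is in the docker group"
else
    echo "this session is not in the docker group (try logging out and back in)"
fi
```

Running id -nG with a user name instead reads the group database directly, so it can be used to confirm that the usermod change itself was recorded.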

Using Docker

We can use Docker images that we can get from the Docker Hub, and in order to obtain them, we need to pull them first. If we do not know the exact name of the image we want, we can search for it with the command:

docker search image_name

Once we find the one we want, we can download it with the pull command:

docker pull image_name

We can use the images command to check what images we have downloaded:

docker images

For example, we can see that we have the hello-world image downloaded, which we can use with the docker run command as shown previously:

[root@host ~]# docker images

REPOSITORY     TAG       IMAGE ID        CREATED         SIZE
hello-world    latest    bf756fb1ae65    4 months ago    13.3kB

Conclusion

Containerization is one of the best methods to ensure compatibility across platforms and maintain the security and stability of multiple applications.


About the Author: Antonia Pollock

I would describe Antonia as a person who enjoys obtaining knowledge in different fields and acquiring new skills. I majored in psychology, but I was also intrigued by Linux and decided to explore this world as well. I believe there should be a balance in every aspect of life, and thus I enjoy both nature and technology equally.
