What is a Service Mesh?

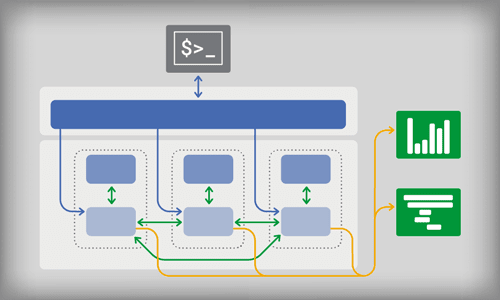

A service mesh is a dedicated infrastructure layer that handles communication between all services in a given application. It is typically deployed as a set of proxies, one alongside each service instance. Because the proxies run beside the application services rather than inside them, they are often referred to as sidecars. Taken together, these sidecar proxies form a mesh network: an infrastructure layer separate from the application. A service mesh brokers communication between all services in an application and, because every request, internal or external, passes through it, it can also take on many tasks that would otherwise have to be handled in application code.
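The sidecar idea can be illustrated with a small in-memory sketch. Everything here is hypothetical (the `SidecarProxy` class, the service names, and the shared `mesh` dictionary); a real mesh proxies network traffic rather than Python function calls, so treat this as a mental model only.

```python
from typing import Callable, Dict, List

Handler = Callable[[str], str]


class SidecarProxy:
    """Hypothetical stand-in for a sidecar: it fronts one service instance."""

    def __init__(self, service_name: str, handler: Handler,
                 mesh: Dict[str, "SidecarProxy"]) -> None:
        self.service_name = service_name
        self._handler = handler   # the application code, unaware of the mesh
        self._mesh = mesh         # shared view of every other sidecar
        self.request_log: List[str] = []
        mesh[service_name] = self

    def inbound(self, source: str, payload: str) -> str:
        # Every inbound request passes through the proxy before the app sees it.
        self.request_log.append(f"{source} -> {self.service_name}")
        return self._handler(payload)

    def outbound(self, destination: str, payload: str) -> str:
        # The app hands the request to its own sidecar, which forwards it
        # to the destination's sidecar rather than to the destination itself.
        return self._mesh[destination].inbound(self.service_name, payload)


mesh: Dict[str, SidecarProxy] = {}
orders = SidecarProxy("orders", lambda p: f"order created for {p}", mesh)
billing = SidecarProxy("billing", lambda p: f"invoice for {p}", mesh)

reply = orders.outbound("billing", "cart-42")
```

Because each request crosses a proxy on the way in, the proxy is a natural place to hang the features described in the rest of this article, without touching the handlers themselves.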

Why do I need a Service Mesh?

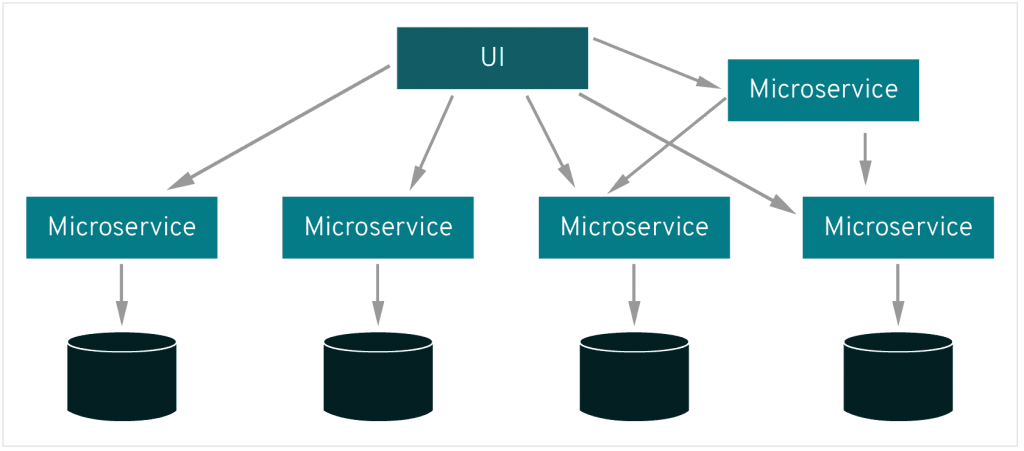

It is becoming increasingly common for high-availability applications to employ a microservice architecture, in which each service is responsible for something specific within the overall application. This kind of architecture offers flexibility: each discrete service can use different technologies and programming languages and can be optimized for its particular purpose. Separation of concerns helps to streamline development by encapsulating functionality. It also makes horizontal scaling quicker, because adding more service instances to handle more load is straightforward.

As the number of services increases, so does their interconnectedness. This is where a service mesh becomes appealing. Managing communication in an extensive distributed application composed of dozens of different services, with hundreds or thousands of instances, is no simple task. Before service meshes existed, communication had to be managed at the application level. Developers had to design and build into the application the mechanism by which each discrete service communicates. This required an in-depth, overarching understanding of each service's dependencies on the other services in the system.

What does a Service Mesh do?

Apart from facilitating communication between all services in a given application, a service mesh provides a number of additional functions and benefits.

Load Balancing

An orchestration platform like Kubernetes has a basic version of this concept built in: its Service component handles simple service discovery and round-robin load balancing. This might work as a start but, as an application becomes more complex, a need for more robust load balancing will arise. It will not be enough to accept an incoming request and pass it along to the next available instance.

A service mesh sees and analyzes all traffic within the application. This means that it can intelligently route traffic to the services or instances most likely to respond quickly. Further, a service mesh can remove a particular instance from rotation based on latency thresholds. If an instance has become unhealthy and is consistently responding slowly to requests, the mesh can quietly pull it out of rotation and try it again later.
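A minimal sketch of latency-aware routing with threshold-based ejection might look like the following. The class name, the moving-average smoothing factor, and the 200 ms threshold are assumptions chosen for illustration and are not taken from any particular mesh implementation; re-admitting an ejected instance later is left out for brevity.

```python
from typing import Dict, List, Optional


class LatencyAwareBalancer:
    """Hypothetical balancer: prefers fast instances, ejects slow ones."""

    def __init__(self, instances: List[str], eject_above_ms: float,
                 alpha: float = 0.5) -> None:
        self.eject_above_ms = eject_above_ms
        self.alpha = alpha  # weight given to the newest latency sample
        self.avg_latency_ms: Dict[str, Optional[float]] = {
            name: None for name in instances
        }

    def record(self, instance: str, latency_ms: float) -> None:
        # Exponentially weighted moving average of observed latency.
        previous = self.avg_latency_ms[instance]
        if previous is None:
            self.avg_latency_ms[instance] = latency_ms
        else:
            self.avg_latency_ms[instance] = (
                self.alpha * latency_ms + (1 - self.alpha) * previous
            )

    def healthy(self) -> List[str]:
        # Instances with no samples yet count as healthy so they get tried.
        return [
            name for name, avg in self.avg_latency_ms.items()
            if avg is None or avg <= self.eject_above_ms
        ]

    def pick(self) -> str:
        candidates = self.healthy()
        if not candidates:
            raise RuntimeError("no healthy instances available")
        # Unobserved instances sort first (treated as 0 ms).
        return min(candidates, key=lambda n: self.avg_latency_ms[n] or 0.0)


balancer = LatencyAwareBalancer(["a", "b", "c"], eject_above_ms=200.0)
balancer.record("a", 40.0)
balancer.record("b", 20.0)
balancer.record("c", 900.0)  # consistently slow, so it is ejected
chosen = balancer.pick()
```

Here instance `c` drops out of `healthy()` once its average latency exceeds the threshold, and `pick()` chooses the instance with the lowest average among those that remain.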

Service Discovery

A microservice architecture requires services to understand how to communicate with one another but, at a more fundamental level, services also need to know that other services exist. A service mesh proxy, deployed as a sidecar with a container, registers a new service instance with the broader mesh almost instantly. This means that the wider application can make use of newly spun-up resources right away.
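The registration step can be sketched as a small in-memory registry. The `ServiceRegistry` class and the addresses below are hypothetical; real meshes usually derive this information from the orchestrator rather than from explicit calls like these.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set


class ServiceRegistry:
    """Hypothetical registry that sidecars update as instances come and go."""

    def __init__(self) -> None:
        self._instances: DefaultDict[str, Set[str]] = defaultdict(set)

    def register(self, service: str, address: str) -> None:
        # Called by a sidecar when its service instance starts.
        self._instances[service].add(address)

    def deregister(self, service: str, address: str) -> None:
        # Called when the instance shuts down or is removed.
        self._instances[service].discard(address)

    def lookup(self, service: str) -> List[str]:
        # Returns a sorted list so results are stable between calls.
        return sorted(self._instances.get(service, set()))


registry = ServiceRegistry()
registry.register("billing", "10.0.0.5:8080")
registry.register("billing", "10.0.0.6:8080")  # newly spun-up instance
billing_addresses = registry.lookup("billing")
```

Any service that asks for `billing` after the second `register` call sees both instances, which is the behavior the paragraph above describes.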

Monitoring

A major advantage of using a service mesh with a microservice architecture is the ability to monitor the services within the application. Like brokering communication between thousands of individual containers, monitoring a distributed application is a hard problem. Previously, developers had to make the application aware of the monitoring infrastructure and of how to communicate with it. Because services within an application behave so differently, this often meant writing a shared library to keep monitoring conventions consistent across the different parts of the application.

A service mesh continuously collects information about all communication within the mesh and, therefore, within the application. This data is readily available, and service mesh implementations typically make it possible to export it to other tools, such as Prometheus, for inspection. Traffic metrics are available from the proxy level to the service level, offering insight into latency, saturation, and errors. Service meshes may provide distributed traces, which give an in-depth view of service-to-service requests as they move through the mesh. A service mesh may also provide access logs to help users understand requests from the perspective of an individual instance.
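As a rough illustration of the kind of data a proxy can gather, the sketch below aggregates latency and error counts per source and destination pair. The `MeshMetrics` and `RouteStats` names are hypothetical, and the metrics are kept in memory here, whereas a real mesh would expose them to a monitoring system.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict, List, Tuple


@dataclass
class RouteStats:
    latencies_ms: List[float] = field(default_factory=list)
    errors: int = 0

    @property
    def requests(self) -> int:
        return len(self.latencies_ms)

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0

    @property
    def mean_latency_ms(self) -> float:
        return sum(self.latencies_ms) / self.requests if self.requests else 0.0


class MeshMetrics:
    """Hypothetical collector fed by every sidecar in the mesh."""

    def __init__(self) -> None:
        self.routes: DefaultDict[Tuple[str, str], RouteStats] = defaultdict(RouteStats)

    def observe(self, source: str, destination: str,
                latency_ms: float, status_code: int) -> None:
        stats = self.routes[(source, destination)]
        stats.latencies_ms.append(latency_ms)
        if status_code >= 500:  # count server-side failures as errors
            stats.errors += 1


metrics = MeshMetrics()
metrics.observe("orders", "billing", 10.0, 200)
metrics.observe("orders", "billing", 30.0, 200)
metrics.observe("orders", "billing", 50.0, 503)
metrics.observe("orders", "billing", 70.0, 200)
route = metrics.routes[("orders", "billing")]
```

None of the services had to import a monitoring library for these numbers to exist; the proxy observed them in passing.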

Encryption

Another consideration in a microservice architecture is how to handle encryption. Requests flowing from one part of the application to another need to be encrypted and decrypted as they go. Managing this at the application layer adds complexity on top of what may already be complicated business logic in that service. A service mesh can once again abstract this responsibility away from the application layer. It can handle encryption and decryption and, where possible, it can improve performance by reusing persistent connections instead of opening new ones. Creating a new connection is typically an expensive operation, so reducing the number of new connections needed for communication between services is a significant benefit of using a service mesh.
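The connection-reuse point can be made concrete with a toy pool. `FakeSecureConnection` is a hypothetical placeholder whose constructor stands in for a costly handshake; it performs no real encryption or networking, and the addresses are invented.

```python
from typing import Dict


class FakeSecureConnection:
    """Hypothetical connection; creating one stands in for a costly handshake."""

    handshakes = 0  # counts how many connections were ever opened

    def __init__(self, destination: str) -> None:
        FakeSecureConnection.handshakes += 1
        self.destination = destination

    def send(self, payload: str) -> str:
        # Placeholder only: no real encryption happens here.
        return f"encrypted({payload}) -> {self.destination}"


class ConnectionPool:
    """Keeps one open connection per destination and reuses it."""

    def __init__(self) -> None:
        self._open: Dict[str, FakeSecureConnection] = {}

    def get(self, destination: str) -> FakeSecureConnection:
        conn = self._open.get(destination)
        if conn is None:
            conn = FakeSecureConnection(destination)
            self._open[destination] = conn
        return conn


pool = ConnectionPool()
for i in range(100):
    pool.get("billing:8443").send(f"request-{i}")
last = pool.get("inventory:8443").send("request-100")
total_handshakes = FakeSecureConnection.handshakes
```

One hundred and one requests result in only two handshakes, one per destination, which is the saving a sidecar can provide on behalf of the service it fronts.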

Authentication

How does one service know where a request from another service is coming from? Should that request be processed? Has that request been vetted? These questions arise when considering the vast number of requests taking place in a microservice architecture. The application can handle authentication and authorization, but it is a complex problem to solve when so many disparate services need to communicate with one another. A sidecar proxy in a service mesh is already listening for requests and sending them where they need to go. From this perspective, pulling authentication and authorization out of the application and leaving them to the infrastructure that carries the requests is a definite win for the application. A developer no longer needs to be concerned with how one service will authenticate to another.
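A sidecar-side authorization check could be sketched as below. The policy table, the service names, and the response strings are all hypothetical, and the sketch assumes the caller's identity has already been verified by the proxy (for example, from a certificate) before `authorize` is called.

```python
from typing import Dict, Set

# Hypothetical policy: which caller identities each destination accepts.
ALLOWED_CALLERS: Dict[str, Set[str]] = {
    "billing": {"orders"},
    "inventory": {"orders", "billing"},
}


def authorize(caller_identity: str, destination: str) -> bool:
    # Destinations without a policy entry accept no callers (deny by default).
    return caller_identity in ALLOWED_CALLERS.get(destination, set())


def handle_inbound(caller_identity: str, destination: str, payload: str) -> str:
    # The proxy rejects the request before the application ever sees it.
    if not authorize(caller_identity, destination):
        return "403 Forbidden"
    return f"200 OK: {destination} processed {payload}"


allowed = handle_inbound("orders", "billing", "invoice-7")
denied = handle_inbound("frontend", "billing", "invoice-7")
```

The services themselves contain no authorization logic; changing who may call `billing` means editing the policy, not redeploying application code.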

When to use a Service Mesh?

The answer depends on an analysis of the application. A service mesh is typically most useful, and most easily implemented, in a microservice architecture with an orchestration layer like Kubernetes. Istio, for example, can be deployed as a sidecar to the pods in a Kubernetes application and provides immediate, tangible benefits in terms of routing, load balancing, monitoring, and more. For applications that do not use an orchestration service to provision resources, implementing a service mesh is more complicated, and it may lose some of its appeal as an out-of-the-box solution for communication between different parts of an application.

Conclusion

Ultimately, a service mesh is a powerful facilitator for microservice-based, highly orchestrated applications. It provides a clear delineation between the application and the communication infrastructure. This delineation keeps developers focused on the application itself: adding new features and fixing bugs. It also gives operations teams a well-defined area of responsibility for managing the application.

About the Author: Justin Palmer

Justin Palmer is a professional application developer with Liquid Web.
