InterWorx Remote SFTP Backups with Rotation

Introduction

Far too often, we forget about backups until we need them, like in the case of a drive failure or the accidental deletion of a file or database. Taking frequent backups is a good policy to follow. Whether you choose to use one of your servers, an online backup service that provides SFTP access, or another method, having a cost-effective and reliable backup provides peace of mind.

This article covers remote backups on InterWorx, the pros and cons of the available backup methods, and a custom wrapper script that manages remote backups on an SFTP server and solves the backup rotation problem.

What is SFTP?

The Secure File Transfer Protocol (SFTP), sometimes referred to as SSH File Transfer Protocol, is both a protocol (defined standard of communication over a specific port) and the name of the Linux file transfer tool that supports the protocol.

SFTP sends files over an encrypted SSH transport, and the SFTP server is part of the SSH server service included on most Linux systems. It is widely used, and other operating systems, such as Microsoft Windows Server, can also act as the SFTP server.

Most modern file transfer tools, such as FileZilla and WinSCP, support SFTP. In addition, most recent mid-range and high-end home internet routers with a USB port allow attaching an external USB drive for SFTP storage. Most modern routers run some variation of Linux as the core operating system, regardless of the web interface.

In the early days of the internet, security was less of a concern, and File Transfer Protocol (FTP) was the standard for efficient file transfers over the internet. As more businesses and consumers began having an internet presence, adding security features became a more relevant and pressing concern.

While later extensions to FTP added FTP over TLS (FTPS), not all servers or clients support it. FTPS in implicit mode uses port 990, while the standard port for SFTP is port 22, the same port used by the SSH service on most servers.

InterWorx has two SFTP ports: one served by the default SSH service and another served by the ProFTPD daemon on port 24. The distinction is that ProFTPD, via the mod_sftp module, lets you manage (add and delete) the SFTP users on port 24 through the SiteWorx control panel.

A cPanel server prevents you from adding additional SFTP users to a cPanel account. On InterWorx, a new FTP user in your SiteWorx FTP account will automatically work as an SFTP user.

Prerequisites

This tutorial will require the following prerequisites:

- InterWorx server

- SFTP server credentials

- Basic knowledge of bash scripts, variables, SSH, and troubleshooting

Pros and Cons of Available Backups for InterWorx

By default, InterWorx has a few different backup solutions currently available.

Each has its pros and cons.

Wrapper Scripts

A wrapper script is a script that calls a particular program with all of its command-line options already defined. In this case, the wrapper calls a script to mount the remote SFTP server as a local directory, runs the backups, processes the backup retention, and then unmounts the remote directory.

Use a wrapper script to rotate backups created from the built-in NodeWorx CLI tool /home/interworx/bin/backup.pex and an associated cron. An example cron will be provided later in the article.

| PROS | CONS |
|---|---|
| Can set custom backup retention. | Requires having the SFTP server credentials stored in a text file on the file system. |
| Supports SFTP using an additional wrapper script. | Depends on additional tools, specifically the binaries for sshfs and expect. |
| The cron job and wrapper can be customized to meet your needs. | Restoring an account requires knowing how to use CLI tools and either mounting the remote SFTP server directory or transferring the account back to the server using SFTP or SCP. |
| | Requires a moderate level of skill to debug an issue. |
| | Falls under the Liquid Web Beyond Scope Support Policy. |
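The retention logic that such a wrapper needs can be sketched in a few lines. This is a minimal illustration, not the script used later in this article: rotate_backups is a hypothetical helper name, and it assumes backup directories are named PREFIX_YYYYMMDD so that sorting by name is also sorting by date.

```shell
#!/bin/bash
# Hypothetical helper (not part of InterWorx): delete the oldest PREFIX_YYYYMMDD
# directories in DIR until fewer than KEEP remain, leaving room for a new one.
rotate_backups() {
    local dir=$1 prefix=$2 keep=$3 count oldest
    while :; do
        count=$(find "$dir" -mindepth 1 -maxdepth 1 -type d -name "${prefix}_[0-9]*" | wc -l)
        [ "$count" -ge "$keep" ] || break
        # Zero-padded dates sort chronologically, so the first name is the oldest.
        oldest=$(find "$dir" -mindepth 1 -maxdepth 1 -type d -name "${prefix}_[0-9]*" | sort | head -n1)
        [ -n "$oldest" ] || break
        rm -rf -- "$oldest"
    done
}

# Demonstration in a throwaway directory: four old backups, retention of four.
demo=$(mktemp -d)
for d in 20210306 20210307 20210308 20210309; do mkdir "$demo/Daily_$d"; done
rotate_backups "$demo" Daily 4      # removes Daily_20210306
mkdir "$demo/Daily_20210310"        # the new backup takes the freed slot
ls "$demo"
rm -rf -- "$demo"
```

Running the rotation before creating the new directory means the retention count includes the backup that is about to be written, which is the same ordering the wrapper in this article follows.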

Global Backups

Global backups for all SiteWorx accounts can be configured using the NodeWorx CLI tool /home/interworx/bin/backup.pex directly and scheduled via a cron.

| PROS | CONS |
|---|---|
| Backups of all accounts are created. | Cannot be managed or modified in the NodeWorx control panel. |
| Runs efficiently. | Only works with a local file system. |
| | There is no backup rotation, so older backups need to be removed manually or by a wrapper script. |
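As a rough sketch of what a direct call looks like, the helper below only prints the backup.pex command line instead of running it. The flags are the ones the wrapper script in this article passes to backup.pex; global_backup_cmd, the /backup/global directory, and the email address are placeholders chosen for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print (do not run) a backup.pex invocation for all
# SiteWorx accounts, using the same flags as the wrapper script in this article.
global_backup_cmd() {
    local outdir=$1 email=$2
    printf '%s --backup-options all --domains all --output-dir %s --email %s --filename-format %%D\n' \
        /home/interworx/bin/backup.pex "$outdir" "$email"
}

# Placeholder values; replace them with a real directory and address.
global_backup_cmd /backup/global admin@example.com
```

Printing the command first makes it easy to review the options before adding the line to the root crontab.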

SiteWorx Backups

Account backups can be scheduled within each SiteWorx account.

| PROS | CONS |
|---|---|
| Each user can configure and schedule their own backups. | It needs to be configured for each user. |
| Full or partial backups (such as a database) can be created. | It does not support automatic backup rotation, so older backups need to be manually removed. |
| Supports local or remote backups using FTP and SFTP. | |

NodeWorx Backup Plugin

The new NodeWorx Backup plugin can be enabled by going to NodeWorx > Plugins and selecting Siteworx-Backup-Cron.

| PROS | CONS |
|---|---|
| Integrated into the NodeWorx GUI. | Still in beta testing. The plugin must be manually enabled. |
| Actively developed to provide additional backup options. | Does not yet support remote backup destinations. |
| Supports being able to configure daily, weekly, and monthly backup retention values. | |
| Has an option to disable backups if the backup destination disk space falls below a set minimum percentage of free space. | |

New Backup Method Using SFTP

To utilize the remote SFTP server backup wrapper, we have two software requirements:

- Expect: This tool is handy for scripting. It is most commonly used when you need to respond to a question from a program with a script. In this case, it is used to respond to the login request for the SFTP server with the password.

- SSHFS (SSH File System): This tool uses FUSE to mount the remote SFTP server to the local file system to treat the backups as if they are on a local drive.

Implementing Backups

Installation

Verify whether the Expect tool is pre-installed on your InterWorx server and then install SSHFS.

On any Red Hat Enterprise Linux (RHEL) or CentOS-based server that InterWorx runs on, we can use either yum or rpm to verify the packages are installed.

To determine whether the Expect package is on the server, run the rpm command with the package named expect. The output below indicates the Expect package is not installed on the server.

$ rpm -q expect

package expect is not installedRun the same rpm command as before, but use fuse-sshfs instead of expect. Per the output, SSHFS is not installed on the server either.

$ rpm -q fuse-sshfs

package fuse-sshfs is not installedYou should see something similar to the following but most likely with different version numbers if both packages are installed. The versions won’t matter in this case.

$ rpm -q expect

expect-5.45-14.el7_1.x86_64

$ rpm -q fuse-sshfs

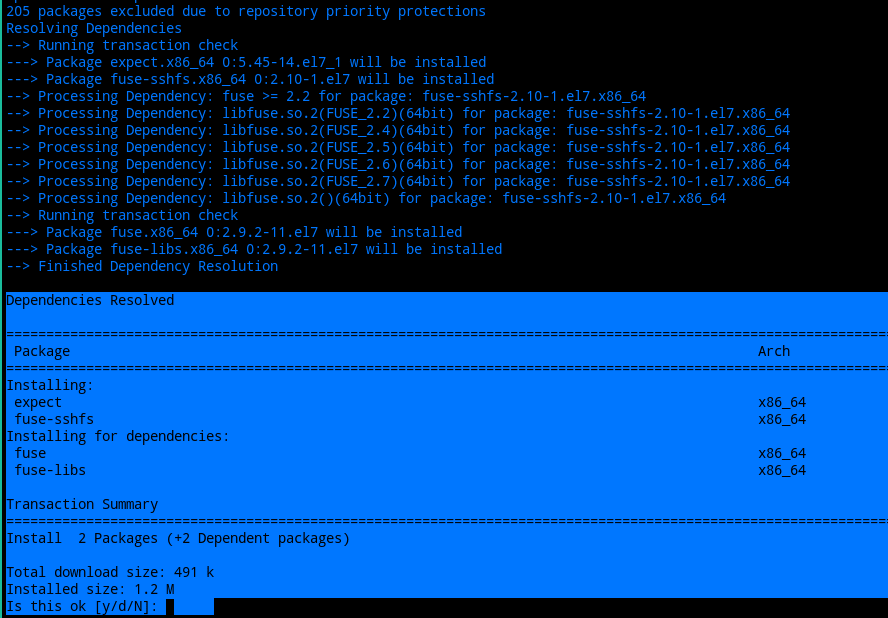

fuse-sshfs-2.10-1.el7.x86_64Most likely, you will need to install at least SSHFS. But you’ll likely want to try to install both packages mentioned. This will also install some additional packages for sshfs.

$ yum install fuse-sshfs expectWhen installing SSFHS, you should see something similar to the output below. As long as it’s not trying to remove any packages, generally, it is safe to proceed with the installation.
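The two rpm checks can also be wrapped in a small loop. The sketch below assumes an rpm-based system; check_packages is a hypothetical helper name, and on a system without rpm every package is simply reported as missing.

```shell
#!/bin/bash
# Hypothetical helper: report whether each named package is installed,
# based on the exit status of "rpm -q".
check_packages() {
    local pkg
    for pkg in "$@"; do
        if rpm -q "$pkg" >/dev/null 2>&1; then
            echo "$pkg: installed"
        else
            echo "$pkg: missing"
        fi
    done
}

check_packages expect fuse-sshfs
```

Each package produces one line of output, so the result is easy to scan before and after running yum.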

Application

After Expect and SSHFS are installed, create the file /root/backup_mount_sshfs.sh with the following content.

#!/usr/bin/expect

# If you need to debug this login change the above to end with expect -d

# Also worth mentioning if you add -d for debugging it WILL log the credentials. So please don't leave it enabled.

set env(LC_ALL) "C"

set env(PS1) "shell:"

# Set the login timeout

set timeout 30

# Set the login credentials for the remote SFTP server, hostname or IP, port, local and remote directories.

set user "$USERNAME"

set password "$PASSWORD"

set host "$HOSTNAME"

set port "22"

set local_dir "/backup/"

set remote_dir "backups/"

# This is to start a shell process and make sure it has a command prompt

spawn /bin/sh

expect "shell:"

# Next step is to call sshfs using the information we put above to mount the local "backup" directory. Currently I don't have it validate that but it's easy to add in.

# Also local_dir needs to match in /root/interworx-backup-wrapper.sh as well

# Send our login info above using expect and sshfs

send -- "sshfs $user@$host:$remote_dir $local_dir -p $port -o reconnect -o ServerAliveInterval=15 -o idmap=none -o nonempty -o StrictHostKeyChecking=No\r"

# Check for a password prompt and respond by entering the password

expect "password:"

send -- "$password\r"

# Delay one second for the login to complete and return to the shell prompt

sleep 1

# This checks to make sure we returned to the shell and that the sshfs mount completed basically

expect "shell:"

From here, do the following:

- Set the $USERNAME, $PASSWORD, and $HOSTNAME values to the SFTP server information.

- Change the port if it is not port 22.

- Change remote_dir if applicable. A relative path is expected for the location.

Next, make the script executable.

chmod 700 /root/backup_mount_sshfs.sh

Test the SFTP login and mount process to make sure it is working.

BKDIR=/backup

mountpoint -q $BKDIR || /root/backup_mount_sshfs.sh ; [[ $? -eq 0 ]] && echo -e "\n\nSFTP server login completed." || echo -e "\n\nSFTP server login failed <<<<<<<<"

The command above prints either a success or a failure message. If the output ends with SFTP server login completed, the login was successful. If it ends with SFTP server login failed, please see the troubleshooting section.
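An Expect script normally exits with status 0 unless it calls exit with another value, so the $? test may report success even when the mount did not happen. A more defensive variation, offered here as an assumption rather than as part of the original scripts, asks mountpoint whether the directory is really mounted. ensure_mounted is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: run the given mount command only if DIR is not mounted yet,
# then report success based on the actual mount state, not the command's exit code.
ensure_mounted() {
    local dir=$1
    shift
    if ! mountpoint -q "$dir"; then
        "$@" || true
    fi
    mountpoint -q "$dir"
}

# Example call (commented out so that pasting this block mounts nothing):
# ensure_mounted /backup /root/backup_mount_sshfs.sh || echo "SFTP mount failed"
```

Because the function returns the result of the final mountpoint check, a caller can stop before writing backups into an unmounted local directory.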

Verify that the backup directory mounts.

mount | grep $BKDIR

If that looks good, then unmount it.

umount $BKDIR

Create the file /root/interworx-backup-wrapper.sh with the following content.

#!/bin/bash

# InterWorx backup wrapper script

#

# Add to root crontab at preferred time. Packaged compressed, local backups only at this time. NFS or something like CBS for remote backups only.

#

# Configurations are required to use this script!

# How many Daily copies to keep.

# Daily Backups are currently Needed for this Script

DC=4

# How many Weekly copies to keep. 0 is disabled.

WC=0

# How many Monthly copies to keep. 0 is disabled.

MC=0

# Which Day to run Weekly Backups (0..6); 0 is Sunday

DAY_WEEKLY="0"

# Which Day to run Monthly Backups (1..31) 1 or 15 is best

DAY_MONTHLY="1"

# Local Backup directory. This is where SSHFS is mounting the remote location to

BKDIR=/backup/

#Ensure /backup folder exists before running the script

mkdir -p $BKDIR

#Backup Reporting - Pull the current Server Admin Email, replace $ADEMAIL if needed.

ADEMAIL="$(nodeworx -u -n --controller Users --action listMasterUser | grep email | awk '{print $2}')"

# Just setting the date format here which will look like 20210310

date=`date +'%Y%m%d'`

# Create a stub file describing the SSHFS mount so people don't get confused

touch $BKDIR/"This is FUSE mounted as needed-See the MOTD"

# Set a variable for the full path and name of this script when run. This is used to update the MOTD dynamically below

_self="$(realpath $0)"

# Add an MOTD pointing to this script. Check if the MOTD already has something. If not add it in

if [ -f /etc/motd ]; then echo; else touch /etc/motd; fi; if grep -q FUSE /etc/motd; then echo; else echo -e "\nThis server is using a custom FUSE script for Interworx backups that mounts SSHFS from the backup script $_self\n\n" >> /etc/motd ; fi

# Mount the remote SFTP directory via FUSE using sshfs so we can treat it like a local directory. This requires sshfs be installed via YUM

echo "Running login to SFTP server standby"

mountpoint -q $BKDIR || /root/backup_mount_sshfs.sh ; [[ $? -eq 0 ]] && echo -e "\n\nSFTP server login completed. Starting backup run" || echo -e "\n\nSFTP server login failed <<<<<<<<"

function daily {

# Ensure Daily Folder Exists

mkdir -p $BKDIR/daily/

# Check if Daily Backups are Enabled

if [ $DC -gt "0" ]

then

# Ensure Backup Retention is kept

while [ $(\ls -p $BKDIR/daily/ | grep '^Daily_[0-9]\{8\}/$' | wc -l) -ge $DC ]

do ionice -c3 rm -rf $BKDIR/daily/Daily$(\ls -p $BKDIR/daily/ | grep 'Daily_[0-9]\{8\}/$' | head -n1 | sed 's/.*_/_/')

done

# Create New Backup's Folder

mkdir -p $BKDIR/daily/Daily_$date

# Create New Backup

/home/interworx/bin/backup.pex --backup-options all --domains all --output-dir $BKDIR/daily/Daily_$date/ --email $ADEMAIL --filename-format %D > $BKDIR/daily/Daily_$date/backup.log

fi

}

function weekly {

# Ensure Weekly Folder Exists

mkdir -p $BKDIR/weekly/

# Check if Weekly Backups are Enabled

if [ $WC -gt "0" ]

then

# Check if the current day is a Weekly Backup Day

# %w prints 0..6 with 0 = Sunday, matching DAY_WEEKLY above (%u prints 1..7 and never 0)

if [ $(date '+%w') -eq $DAY_WEEKLY ]

then

# Cleanup all old Weekly backups over the set Weekly Count and create weekly snapshot.

while [ $(\ls -p $BKDIR/weekly/ | grep '^Weekly_[0-9]\{8\}/$' | wc -l) -ge $WC ]

do ionice -c3 rm -rf $BKDIR/weekly/Weekly$(\ls -p $BKDIR/weekly/ | grep 'Weekly_[0-9]\{8\}/$' | head -n1 | sed 's/.*_/_/')

done

# Copy Daily backup to the Weekly Folder

ionice -c3 rsync -aH --delete --link-dest=$BKDIR/daily/Daily_$date/ $BKDIR/daily/Daily_$date/ $BKDIR/weekly/Weekly_$date/

fi

fi

}

function monthly {

# Ensure Monthly Folder Exists

mkdir -p $BKDIR/monthly/

# Check if Monthly Backups are Enabled

if [ $MC -gt "0" ]

then

# Check if the current day is a Monthly Backup Day

if [ $(date '+%d') -eq $DAY_MONTHLY ]

then

# Cleanup all old Monthly backups over the set Monthly Count and create Monthly snapshot.

while [ $(\ls -p $BKDIR/monthly/ | grep '^Monthly_[0-9]\{8\}/$' | wc -l) -ge $MC ]

do ionice -c3 rm -rf $BKDIR/monthly/Monthly$(\ls -p $BKDIR/monthly/ | grep 'Monthly_[0-9]\{8\}/$' | head -n1 | sed 's/.*_/_/')

done

# Copy Daily backup to the Monthly Folder

ionice -c3 rsync -aH --delete --link-dest=$BKDIR/daily/Daily_$date/ $BKDIR/daily/Daily_$date/ $BKDIR/monthly/Monthly_$date/

fi

fi

}

daily

weekly

monthly

# Backups are done by this point or should be. Unmount the remote backup directory

echo "running umount $BKDIR"

umount $BKDIR ; [[ $? -eq 0 ]] || echo "Warning umount $BKDIR failed"

echo "Backup script finished"

Set the daily, weekly, and monthly desired retention values as shown below.

# Daily Backups are currently Needed for this Script

DC=4

# How many Weekly copies to keep. 0 is disabled.

WC=0

# How many Monthly copies to keep. 0 is disabled.

MC=0

# Which Day to run Weekly Backups (0..6); 0 is Sunday

DAY_WEEKLY="0"

# Which Day to run Monthly Backups (1..31) 1 or 15 is best

DAY_MONTHLY="1"

Verify your email address is configured correctly to receive backup notifications.

nodeworx -u -n --controller Users --action listMasterUser | grep email | awk '{print $2}'

If not, it will need to be set in NodeWorx, or you can manually configure an email address by modifying the following line.

ADEMAIL="$(nodeworx -u -n --controller Users --action listMasterUser | grep email | awk '{print $2}')"

An example would be backup-notifications@example.com, as shown below.

ADEMAIL="backup-notifications@example.com"

Make the backup wrapper script executable by running the chmod command.

chmod 700 /root/interworx-backup-wrapper.sh

Test the backup script. You may want to run this in a screen session as it will take some time to run.

/root/interworx-backup-wrapper.sh

If everything looks good and the backup is completed, set up the desired cron job.

For example, we want the backup to run on Sundays and Wednesdays at 1:00 a.m. server time and output a log with a timestamp.

First, we have to make that directory for the backup logs.

mkdir -p /var/log/backup

The root cron entry will look similar to this.

# Remote backup cron

0 1 * * 0,3 /root/interworx-backup-wrapper.sh > /var/log/backup/backup_$(date +\%F).log 2>&1

Troubleshooting

You may run into problems during the backup process. Look through the tips below to help you troubleshoot any errors.

Unable to Log Into the SFTP Server

Things to check:

- Is the SSH port correct?

- Is the SSH port allowed in TCP_OUT in /etc/csf/csf.conf?

- Are you able to use SFTP to log in manually?

- Does the password have a character that needs to be escaped?
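Two of these checks can be scripted. The sketch below is illustrative only: tcl_escape and port_in_tcp_out are hypothetical helper names, the first assumes the password is placed inside a double-quoted Tcl string as in the Expect script above, and the second assumes the usual csf.conf layout of a single TCP_OUT = "..." line holding comma-separated ports and low:high ranges.

```shell
#!/bin/bash
# Hypothetical helper: backslash-escape the characters that Tcl (and therefore
# Expect) treats specially inside a double-quoted string: [ ] \ $ "
tcl_escape() {
    printf '%s\n' "$1" | sed 's/[][\\$"]/\\&/g'
}

# Hypothetical helper: return 0 if PORT is listed in TCP_OUT of a CSF config
# file, either as a single port or inside a low:high range.
port_in_tcp_out() {
    local port=$1 conf=${2:-/etc/csf/csf.conf} list entry
    local -a entries
    list=$(sed -n 's/^TCP_OUT *= *"\(.*\)".*/\1/p' "$conf" | head -n1)
    IFS=, read -ra entries <<< "$list"
    for entry in "${entries[@]}"; do
        case $entry in
            *:*) [ "$port" -ge "${entry%%:*}" ] && [ "$port" -le "${entry##*:}" ] && return 0 ;;
            *)   [ "$port" = "$entry" ] && return 0 ;;
        esac
    done
    return 1
}

# Example: show how a password containing special characters would be written.
tcl_escape 'pa$s[1]'
```

The escaped form is what would go between the quotes on the set password line. On a server running CSF, port_in_tcp_out 22 checks the default configuration file.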

The Backups Are Not Running/Working

Things to check:

- Verify that the cron was added as the root user.

- What does the backup log in /var/log/backup/ show? There can be several causes as to why the backups are having issues.
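To find the most recent log quickly, a helper along these lines may be useful. It is a sketch that assumes the backup_*.log naming used by the cron entry in this article; latest_backup_log is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: print the most recently modified backup log in DIR
# (defaults to /var/log/backup). Prints nothing if no log exists.
latest_backup_log() {
    local dir=${1:-/var/log/backup}
    ls -1t "$dir"/backup_*.log 2>/dev/null | head -n1
}

# Example (commented out): show the end of the newest log.
# tail -n 50 "$(latest_backup_log)"
```

To confirm the cron entry itself, running crontab -l as the root user should show the line that calls /root/interworx-backup-wrapper.sh.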

If you are still not sure, please reach out to us in a ticket, and we will be happy to help.

Conclusion

Having regular backups provides peace of mind in case something happens on your server. There are multiple methods for obtaining backups, but each has its own pros and cons. To learn more about InterWorx and to get started visit our InterWorx product page. We also offer a variety of other managed hosting services, including dedicated server hosting, VPS hosting, and private cloud VMware hosting.

About the Author: Jamie Sexton

Jamie Sexton is a Linux Systems Administrator at Liquid Web. Jamie has a passion for developing solutions to complex issues and a strong desire for tinkering with all things in a hands-on fashion like computer hardware and software for both Linux and Windows.
